This blog is part three of a series on the findings of survey experiments with policymakers in 35 developing countries. Read part one in the series here and part two here.

Does research change the minds of education policymakers in the developing world?

It’s easy to list many reasons that education policy in low- and middle-income countries might not reflect the best available evidence on ‘what works’. Policymakers are politically constrained in what they can do. And policy choices that seem baffling to policy wonks may reflect underlying disagreement about policy goals. But sometimes, perhaps, policymakers just aren’t aware of what research shows, or even—gasp!—simply aren’t persuaded by the evidence they’re presented.

Last year we conducted a survey of over 900 senior officials in Ministries of Education (or related government agencies) in 35 low- and middle-income countries. We surveyed them to understand how and by whom their priorities and perceptions are influenced, as well as to elicit their priorities for education policy and spending in their countries and their views on aid donors.

Here, we discuss our findings on what officials look for in research and how it might affect their policy priorities.

Education officials were more likely to be convinced by bigger samples and relevant contexts

As the number of impact evaluations of programs and policies in low- and middle-income countries grows, so too do concerns about external validity and the scalability and replicability of studies. And it seems that policymakers and policy implementers may share this concern.

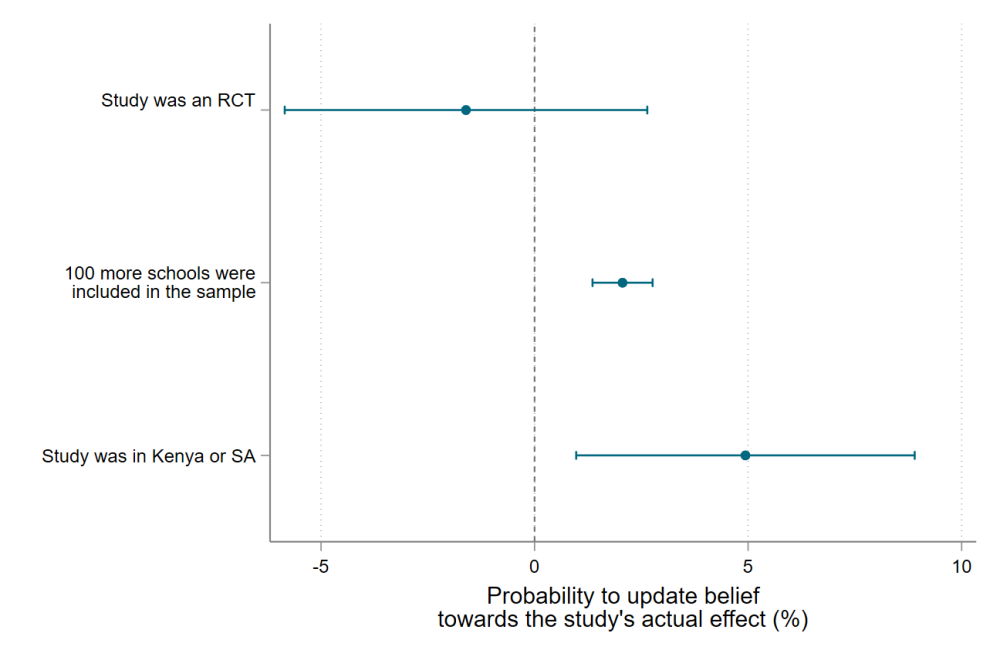

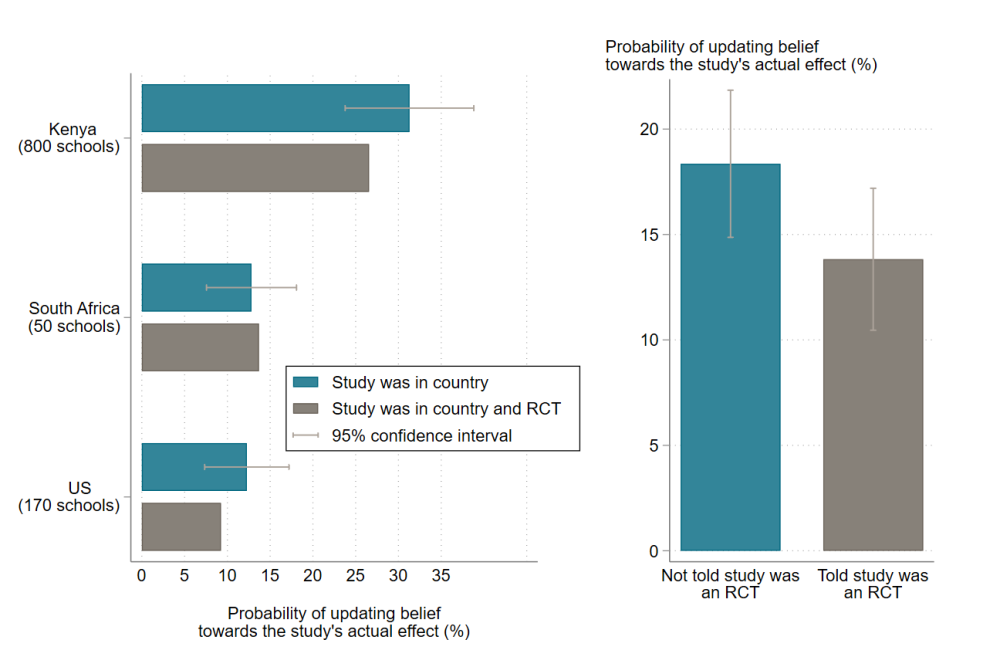

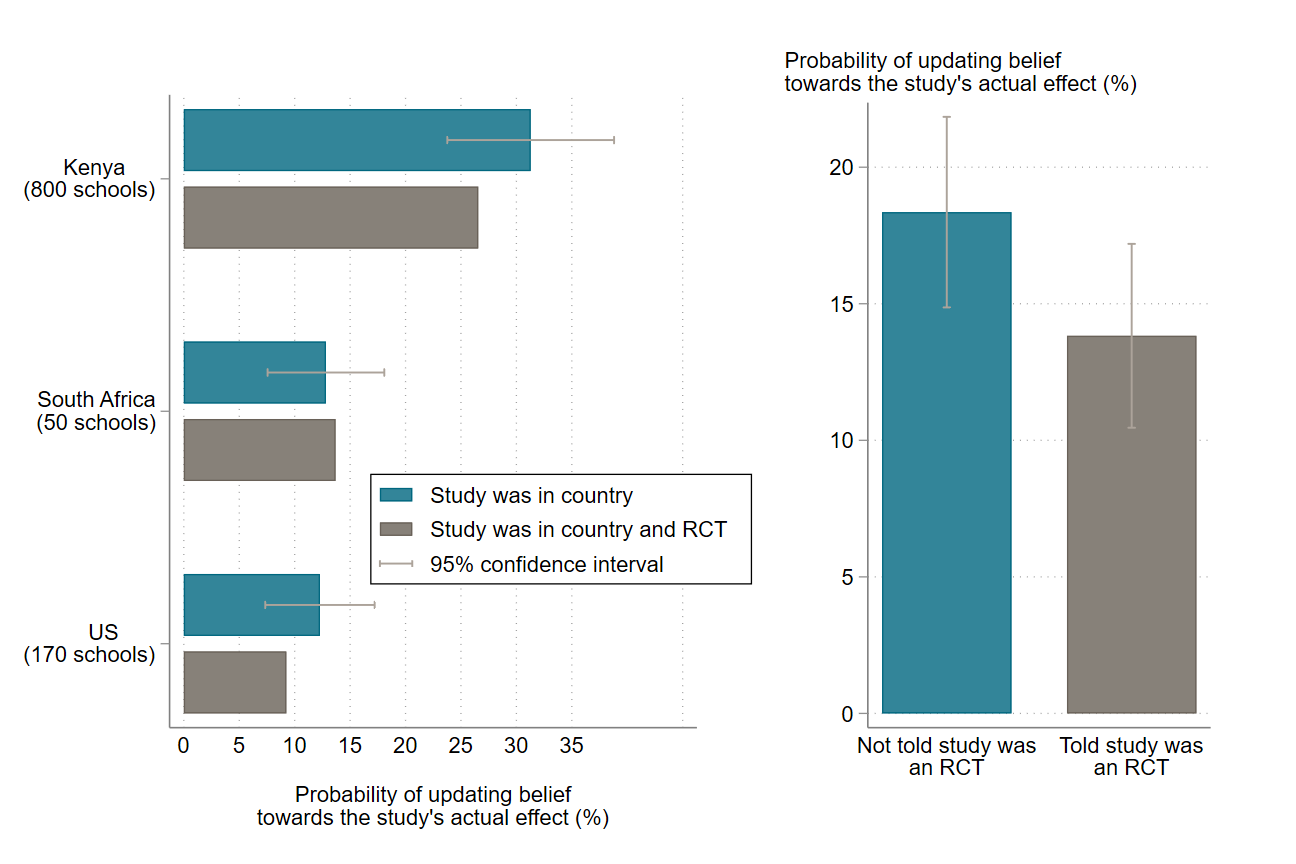

To measure what kind of evidence convinces policymakers to update their beliefs and inform policy, we ran an information experiment in our survey. We presented them with a real study on scripted lesson plans, describing the sample size and the location of the study, and asked the officials to guess the effect size of the program. We randomized the location of the study, presenting one of three studies to each respondent: a study with 50 schools in South Africa or one with 800 schools in Kenya. Also, while all of these studies were randomized controlled trials (RCTs), we revealed that fact only to a random subset of respondents.

After telling the officials how large an effect the study had found, we asked them what they thought the effect size would be if the project were replicated in their country. If the study’s characteristics convince policymakers that the evidence is credible enough to inform policy, we should observe them revising their initial guess to a new estimate that is closer to the study’s true effect. We measure how much officials update their beliefs by how far they move their estimate of the effect size toward the study’s actual result once it is revealed.
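The updating measure described above can be sketched in a few lines of Python. This is only an illustration under assumptions: the function names, the normalisation by the initial gap, and the example numbers are hypothetical and are not taken from the survey's actual analysis.

```python
def updated_toward_study(prior, posterior, study_effect):
    """True if the revised guess is closer to the study's effect than the first guess."""
    return abs(posterior - study_effect) < abs(prior - study_effect)


def update_share(prior, posterior, study_effect):
    """Share of the gap between the first guess and the study's effect that was closed.

    0 means no movement, 1 means the respondent fully adopted the study's
    effect, and negative values mean movement away from it.
    Returns None when the first guess already equals the study's effect.
    """
    gap = study_effect - prior
    if gap == 0:
        return None  # no room to update
    return (posterior - prior) / gap


# Hypothetical respondent: first guess 0.5, study reports 0.25, revised guess 0.375.
print(updated_toward_study(0.5, 0.375, 0.25))
print(update_share(0.5, 0.375, 0.25))
```

A binary indicator like `updated_toward_study` is the kind of outcome that fits the "more likely to update" framing, while `update_share` captures how far a respondent moved.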

We found that officials are more likely to update their beliefs when presented with evidence from another low- or middle-income country, i.e., Kenya or South Africa. They were also more likely to update their beliefs if the study had a large sample size: approximately 15 percentage points more likely with a sample of 800 schools rather than 50 schools, or two percentage points per additional 100 schools.
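As a quick check on the arithmetic, the per-100-schools figure can be scaled across the gap between the two sample sizes. The sketch below assumes the relationship is linear over that range, and the function name is illustrative only.

```python
PP_PER_100_SCHOOLS = 2.0  # percentage points per additional 100 schools, as reported above


def implied_gap_pp(small_sample, large_sample, pp_per_100=PP_PER_100_SCHOOLS):
    """Implied difference in the probability of updating, in percentage points,
    assuming a linear effect of sample size."""
    return (large_sample - small_sample) / 100 * pp_per_100


print(implied_gap_pp(50, 800))  # 750 extra schools -> 15.0 percentage points
```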

Figure 1. Officials were more likely to update their beliefs when presented with a study with a large sample size from another low- or middle-income country

Note: Coefficients from a linear probability model. Lines indicate 95 percent confidence intervals.
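For readers unfamiliar with the term, a linear probability model is ordinary least squares with a binary outcome, here whether a respondent updated toward the study's result. The sketch below is a simplified illustration with a single binary regressor. The toy data are made up for the example (they are not survey data), and the variable names are hypothetical. With one binary regressor, the slope equals the difference in the share of respondents who updated between the two groups.

```python
def ols_simple(x, y):
    """Ordinary least squares of y on a constant and one regressor x.

    Returns (intercept, slope).
    """
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Made-up toy data: 1 = shown the large-sample study, 0 = shown the small-sample study.
large_sample = [0, 0, 0, 0, 1, 1, 1, 1]
# Made-up outcomes: 1 = respondent updated toward the study's effect.
updated = [1, 0, 0, 0, 1, 1, 1, 0]

intercept, slope = ols_simple(large_sample, updated)
print(intercept)  # share updating in the small-sample group
print(slope)      # difference in shares between the two groups
```

The actual figure reports several coefficients with confidence intervals, which this sketch does not attempt to reproduce.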

Our findings contribute to an emerging literature on this topic. In the US, for example, a recent study by Harvard Ph.D. candidate Nozomi Nakajima showed that education policymakers are almost 11 percentage points more likely to use research with a sample size of 2,000 students rather than 500, and 14 percentage points more likely to use research covering 10 schools rather than only one. They also prefer studies in settings with poverty rates and racial composition similar to their own. Similarly, Jonas Hjort (Columbia), Diana Moreira (UC Davis), Gautam Rao (Harvard), and Juan Francisco Santini (Innovations for Poverty Action) show that in Brazil, municipal mayors are willing to pay more for studies with larger samples. However, contrary to our findings and the study of policymakers in the US, the Brazilian mayors do not prefer studies from contexts similar to their own.

Alas, officials were not more likely to be convinced by a randomized controlled trial

RCTs have gained significant traction and recognition within the international development sector. But in our survey, we found that telling officials the study was an RCT had no effect on their probability of updating their beliefs (figure 1 and figure 2). Likewise, the experiment with education policymakers in the US found a small but statistically insignificant preference for experimental studies, or RCTs, over a correlational study.

Figure 2. Telling officials the study was an RCT had no effect on updating beliefs

Note: Bars represent the percentage of respondents who updated their belief toward the study's true effect, grouped by the information they received. Lines indicate 95 percent confidence intervals.

Interestingly, Nozomi Nakajima’s work in the US finds that every scientific reasoning item that American education policymakers answered correctly, including items on causality and confounding variables, increased their probability of choosing an experimental study by 1.3 percentage points. So one reason officials and policymakers do not prefer RCTs may be that they are familiar with the concept but have not fully grasped the rigour of the method.

Like anyone, policymakers have behavioural biases, and the way that evidence is presented to them makes a difference. For instance, Eva Vivalt from the University of Toronto and Aidan Coville at the World Bank find that policymakers are more likely to update their beliefs on imprecisely estimated “good news” than on “bad news” (an asymmetric optimism bias), and are more certain about the results than the evidence would support, particularly when confidence intervals are wider (an overconfidence bias).

With policymakers’ preferences and behavioural biases in mind, the global education sector may want to consider investing in larger, well-powered studies, and investing more in local or national research institutions. A small-scale RCT from another country may be unlikely to convince policymakers to change their minds. Of course, convincing policymakers is not the only reason we run RCTs: randomization allows for credible causal inference, and as researchers we should worry about getting the right answer before we fret about convincing other people to believe us. But knowledge does not always translate into policy action, so aid donors should consider the range of research they need to invest in, both to build knowledge and to influence national policymaking.

Disclaimer

CGD blog posts reflect the views of the authors, drawing on prior research and experience in their areas of expertise. CGD is a nonpartisan, independent organization and does not take institutional positions.