The research organization Aid Data has been getting a lot of attention in the aid world of late for its survey of recipient-country policymakers and practitioners, which asks how useful, influential and helpful during reform they find various aid agencies. Suggests the press release: “According to nearly 6,750 policymakers and practitioners, the development partners that have the most influence on policy priorities in their low-income and middle-income countries are not large Western donors like the United States or UK. Instead it is large multilateral institutions like the World Bank, the GAVI Alliance, and the Global Fund to Fight AIDS, Tuberculosis and Malaria.” That conclusion is based on average worldwide agency scores from the survey.

The Aid Data survey was a long-overdue and valuable exercise that should be very useful for donors trying to improve the impact of their policy advice. Recipient countries’ ratings of how useful donor advice is, how helpful it is for reform and how much donors set the agenda should be a goldmine for aid researchers, especially once more data is released. But here’s where three fundamental methodological concerns arise:

- In many cases, the rankings are based on a tiny sample of self-selected respondents, giving considerable weight to a handful of respondents from sectors and countries where agencies are relatively unengaged.

- Due to a quirk in the methodology, the top-ranked aid agencies were often the ones that survey respondents knew the least about, like LuxDev, Luxembourg’s development agency. And if you listen to what most respondents said about most aid agencies – i.e., they’d never worked with them or never heard of them – the picture of which donors are most influential looks a lot different.

- The cost-effectiveness calculations reward aid agencies only for average user satisfaction per dollar spent, with no consideration of how many users the agency reached.

Admittedly, finding a sample of policymakers from around the world is inherently difficult. The Aid Data questionnaire had a sampling frame of 54,990 and was received by 43,439 people. The number who replied was 6,731, of whom 3,400 were from host governments. With 126 recipient countries involved, that’s about 53 responses per country (including about 27 government employees).

As you can imagine, not every respondent has experience with every agency. Worldwide, 2,715 respondents (40 percent of respondents, 5 percent of the sampling frame) reported experience with the World Bank, 1,676 with DFID, 37 with Luxembourg and 17 with the GAVI Alliance. That last number is less than 0.3% of respondents, or less than 0.04% of the people who received the survey.

Aid Data weights the global scores in two ways. First, global scores are the average of country-level average scores. Imagine there were two responses in Brazil and three in Nigeria, for a total of five worldwide: the two Brazilian responses would be averaged, the three Nigerian responses would be averaged, and Aid Data would report the average of the two country scores as its global score. Second, within each country, each policy area gets the same weight even if the number of respondents differs, so the average of six health sector answers is treated the same as the average of two education sector answers. In effect, this increases the influence on the rankings of scores given by respondents in sectors and countries where a donor is relatively (but not completely) unengaged, as measured by recipient responses.
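To make the two-stage weighting concrete, here is a minimal sketch assuming the scheme works exactly as described above: average within each country-sector cell, then across sectors within a country, then across countries. The scores and the `CountryX` label are hypothetical, invented for illustration; they are not AidData data, and this is not AidData’s own code.

```python
from statistics import mean

# Hypothetical scores on the 1-5 scale: country -> sector -> individual answers.
responses = {
    "Brazil": {"health": [4, 2]},
    "Nigeria": {"health": [5, 5, 2]},
}
# Hypothetical single country with six health answers and two education answers.
one_country = {"CountryX": {"health": [4] * 6, "education": [1, 1]}}


def pooled_mean(data):
    """Unweighted average over every individual answer."""
    return mean(s for sectors in data.values() for scores in sectors.values() for s in scores)


def country_sector_weighted_mean(data):
    """Average of country averages, each itself an average of sector averages."""
    country_means = [mean(mean(scores) for scores in sectors.values()) for sectors in data.values()]
    return mean(country_means)


print(pooled_mean(responses))                   # → 3.6
print(country_sector_weighted_mean(responses))  # → 3.5
print(pooled_mean(one_country))                 # → 3.25
print(country_sector_weighted_mean(one_country))  # → 2.5
```

In the second case the two education answers carry as much weight as the six health answers, which is how thinly covered sectors and countries gain influence on the global score.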

The fourteen respondents who rated the utility of GAVI’s advice (out of the 17 who had experience with the organization) scored it at a country-sector-weighted average of 4.038 on a five-point scale. That’s the highest average for any agency scored. The 1,468 respondents who scored the World Bank’s advice gave it a country-sector-weighted 3.697. The 18 who scored Luxembourg put it at 3.683, which places it sixth in the Aid Data rankings, just behind the World Bank. Taking those three scores, it would be a brave statistician who compared responses to a question answered in (at most) one out of seven sample countries by a total of 0.03% of recipients with responses to a question answered in every sample country by a total of 3.4% of recipients.
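The coverage figures in that comparison follow from simple arithmetic on the published counts. The sketch below assumes “recipients” means the 43,439 people who received the questionnaire. It also assumes that the number of countries an agency’s raters can come from is capped by the number of raters, which gives only an upper bound on country coverage. The helper name `coverage` is our own.

```python
RECEIVED = 43_439  # people who received the questionnaire
COUNTRIES = 126    # recipient countries in the survey


def coverage(n_raters):
    """Share of survey recipients who rated an agency, and the most
    countries those raters could possibly have come from."""
    return n_raters / RECEIVED, min(n_raters, COUNTRIES)


raters = {"GAVI": 14, "Luxembourg": 18, "World Bank": 1_468}
for name, n in raters.items():
    share, max_countries = coverage(n)
    print(f"{name}: {share:.2%} of recipients, at most {max_countries} of {COUNTRIES} countries")
```

Eighteen raters can cover at most 18 of 126 countries, or one in seven. Fourteen raters cover at most one in nine.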

The AidData report doesn’t include confidence intervals for most of the estimates; its authors decided not to report them in order to maximize readability and simplicity.

But roughly speaking, to get 95% confidence in a margin of error of plus or minus 5% on the survey scores, you’d need 382 responses. That’s being generous, and it assumes the respondents are a genuinely unbiased cross section of the 54,990 people of interest for every scoring question used in the rankings, contrary to lots of evidence in the report.
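The 382 figure matches the standard (Cochran) sample-size formula for a proportion. That formula uses the most conservative assumption, p = 0.5, at 95% confidence with a ±5 percentage-point margin and a finite-population correction for N = 54,990. This is our assumption about where the number comes from, since the post does not say. The same formula gives rough margins of error for the actual rater counts. Treating 1-5 scores as proportions is only a crude proxy, and the function names are our own.

```python
import math
from statistics import NormalDist


def required_sample_size(population, margin=0.05, confidence=0.95, p=0.5):
    """Cochran's sample-size formula with a finite-population correction."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 for 95%
    n0 = z ** 2 * p * (1 - p) / margin ** 2              # infinite-population size
    return math.ceil(n0 / (1 + (n0 - 1) / population))


def margin_of_error(n, population, confidence=0.95, p=0.5):
    """Half-width of the normal-approximation interval for a proportion."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    fpc = math.sqrt((population - n) / (population - 1))  # finite-population correction
    return z * math.sqrt(p * (1 - p) / n) * fpc


print(required_sample_size(54_990))  # → 382
for n in (14, 18, 1_468):  # GAVI, Luxembourg and World Bank rater counts
    print(n, f"±{margin_of_error(n, 54_990):.1%}")
```

With 14 raters the margin is roughly ±26 percentage points, against about ±2.5 for 1,468 raters.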

Another approach would be to treat non-answers as information that an agency is neither useful nor agenda-setting. We asked the team at Aid Data, and they said that non-replies don’t count as a declaration that the respondent thinks an agency is useless or has no impact on the agenda, because respondents were told to grade only agencies they had worked with. Still, if a respondent has had no interaction with an aid agency, that agency clearly hasn’t been of much use or very helpful to that respondent (even if they might think it was helpful in general terms). And the fact that no one reports experience with an agency surely speaks to its “agenda-setting” potential (the Illuminati and the Bilderberg Group notwithstanding): it seems unlikely that an agency has had a considerable impact on the agenda of someone who has never worked with it in any way. So there might be some justification for coding non-responses as equivalent to the bottom of the scale (i.e., not influential, not helpful, etc.).

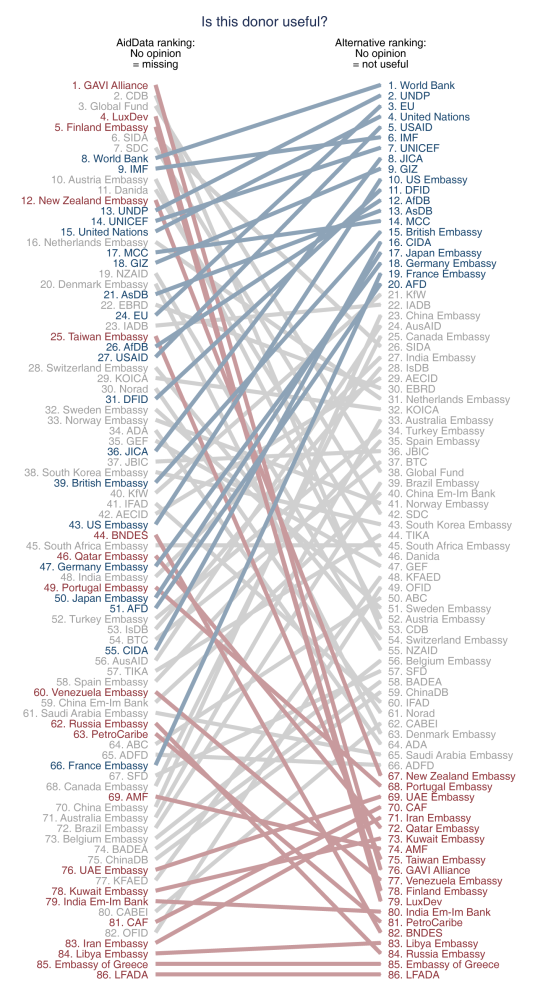

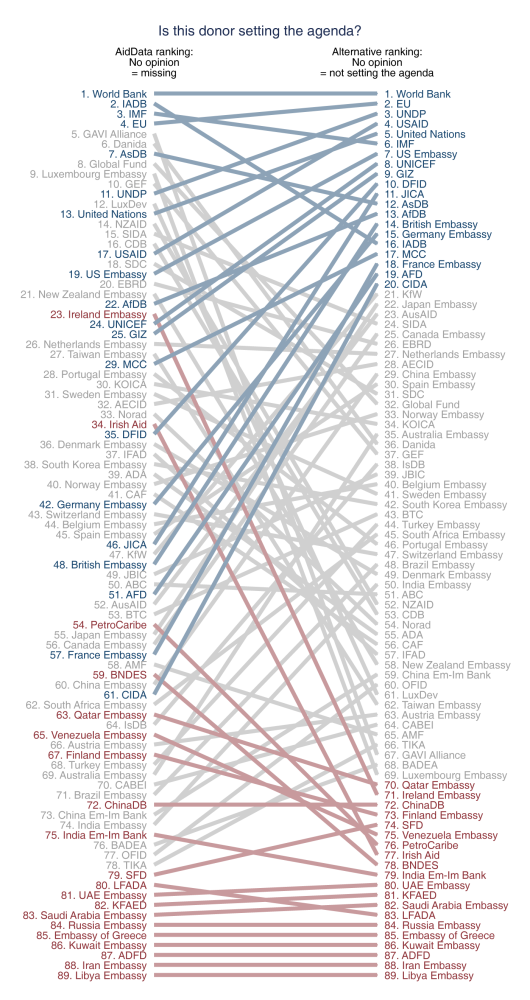

In the graphs below, we re-rank donor agencies using the Aid Data survey, but treating ‘don’t know’ as zero (and shifting all the responses onto a 0-4 scale instead of 1-5).
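A minimal sketch of the rescoring, working only from published aggregates. It shifts a 1-5 average onto 0-4 and then multiplies by the share of respondents who rated the agency, so everyone who could not rate it counts as 0. Using all 6,731 respondents as the denominator is our assumption. Mixing a country-sector-weighted average with a raw global count is also an approximation. This is an illustration, not necessarily the exact calculation behind the figures.

```python
RESPONDENTS = 6_731  # assumed denominator: all survey respondents


def zero_coded_score(mean_score, n_rated, n_total=RESPONDENTS):
    """Rescale a 1-5 average to 0-4 and count non-raters as 0."""
    if not 1 <= mean_score <= 5:
        raise ValueError("mean_score must be on the 1-5 scale")
    if not 0 <= n_rated <= n_total:
        raise ValueError("n_rated must be between 0 and n_total")
    return (n_rated / n_total) * (mean_score - 1)


# Published usefulness averages and rater counts quoted earlier in the post.
published = {
    "GAVI": (4.038, 14),
    "World Bank": (3.697, 1_468),
    "Luxembourg": (3.683, 18),
}
adjusted = {name: zero_coded_score(score, n) for name, (score, n) in published.items()}
ranking = sorted(adjusted, key=adjusted.get, reverse=True)
for name in ranking:
    print(f"{name}: published {published[name][0]:.3f}, zero-coded {adjusted[name]:.4f}")
```

Under these assumptions the World Bank moves from second to first and GAVI from first to last of the three.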

Unfortunately, we don’t have access to the actual survey data, so we can’t apply (or undo) the survey weights Aid Data used. The terms of Aid Data’s human subjects approval from the College of William and Mary’s IRB prohibit it from sharing respondent-level data, and Aid Data hasn’t published the number of respondents per agency per country and sector that we would need to replicate (or undo) its weighting system. For now, it shares survey data that is not already in the public domain only with collaborators and co-authors.

So we’re doing the best we can with the published results. Formally, our jury-rigged approach will only produce the ‘right’ rankings if, across sectors and countries, the share of respondents rating an agency does not correlate with the agency’s average score in each country-sector. We’d welcome the Aid Data team rerunning the exercise to produce exact numbers using this alternative method.
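Why does that condition matter? Here is a sketch with two hypothetical countries that have equal numbers of respondents. The exact approach zero-codes non-raters inside each country and then averages across countries. The aggregate shortcut multiplies the average share of raters by the average score. The two agree when shares and scores are uncorrelated, and differ by their covariance otherwise. All numbers are invented, and the equal-country-size assumption is ours.

```python
from statistics import mean

# Hypothetical (share of respondents who rated the agency, their average
# score on the 0-4 scale) for two equally sized countries.
uncorrelated = [(0.5, 3.0), (0.5, 0.0)]
correlated = [(0.9, 3.0), (0.1, 0.0)]  # higher rating share where scores are higher


def exact_adjusted(cells):
    """Zero-code non-raters within each country, then average countries."""
    return mean(share * score for share, score in cells)


def approximate_adjusted(cells):
    """Shortcut using only aggregates: mean share times mean score."""
    return mean(share for share, _ in cells) * mean(score for _, score in cells)


for label, cells in (("uncorrelated", uncorrelated), ("correlated", correlated)):
    print(label, exact_adjusted(cells), approximate_adjusted(cells))
```

In the correlated case the exact score is about 1.35 while the shortcut gives 0.75. The 0.6 gap equals the covariance between rating share and score.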

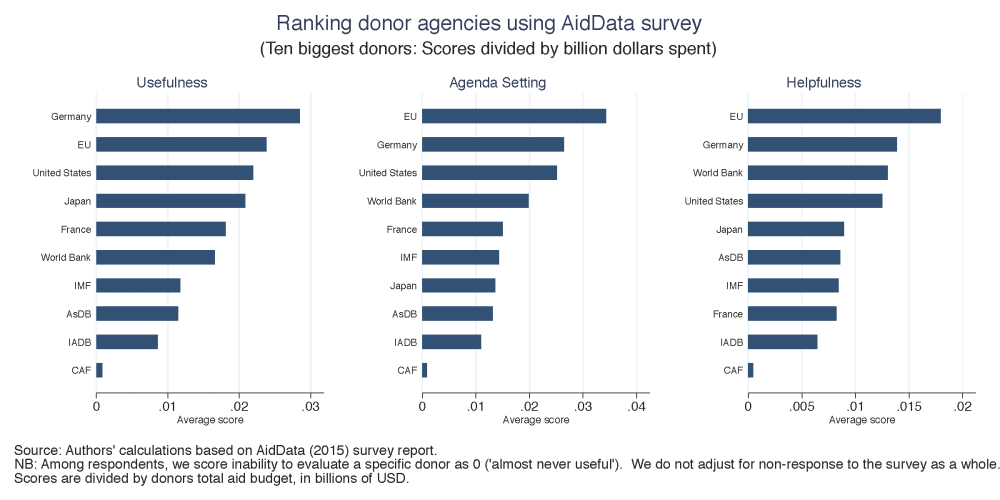

Ranking donor agencies using AidData survey

(Assuming donors you don’t know aren’t helping you)

Source: Authors’ calculations based on AidData (2015) survey report.

NB: Among respondents, we score inability to evaluate a specific donor as 0 (‘almost never useful’).

We do not adjust for non-response to the survey as a whole.

Donor agencies are ranked on three dimensions: usefulness, helpfulness, and whether they’re setting the agenda. In each case, blue font denotes the top twenty performers on our alternative ranking, and red denotes the bottom twenty.

The web of crossing lines indicates that the rankings are very sensitive to how we code non-response. In some cases, donor agencies that were at the very top of the AidData rankings, like Gavi, LuxDev, or the Embassy of Finland in the case of “usefulness,” fall all the way to the bottom twenty once we adjust how we treat non-response. To be clear, that’s not to suggest the policy advice of Gavi, LuxDev and the Embassy of Finland isn’t useful to those who work with them, just that the utility of their advice isn’t as widespread as (say) the World Bank’s.

The problem of low response rates looms particularly large when the report starts ranking agencies on "value for money." There, scores on agenda setting are compared to agency budgets: a small budget and a large ‘agenda setting’ score are taken as evidence of punching above your weight. But suppose only seventeen people worldwide have any experience with your agency, compared with 1,468 for another agency. Even if those seventeen say your agency is good at setting the agenda, is that really evidence of greater cost-effectiveness? In most countries in the sample, simply no one will have said anything about Luxembourg’s agenda-setting potential, and yet it is ranked on a global scale next to the World Bank, for which almost every country will have a score. Perhaps Luxembourg has a small budget and sets the agenda fantastically in three small island states, while the World Bank has a large budget and does not do quite so well at setting the agenda in India, China, Brazil and Nigeria. Which one is better value for money? Again, we can recalculate the ‘agenda setting per dollar’ of the top ten agencies by giving an agency a zero score for each non-response rather than throwing out those data points (though the earlier caveats about weighting still apply).
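The Luxembourg-versus-World-Bank thought experiment can be put into numbers. In this sketch every input (scores, budgets in millions of US dollars, and the number of countries with answers) is hypothetical. We assume the “raw” measure is simply the published 1-5 score divided by budget, which may differ from AidData’s actual value-for-money method. The zero-coded version treats countries with no answers as 0 on a 0-4 scale.

```python
COUNTRIES = 126

# Hypothetical inputs for illustration only.
agencies = {
    "Luxembourg (hypothetical)": {"score": 4.0, "countries_rated": 3, "budget": 100},
    "World Bank (hypothetical)": {"score": 3.5, "countries_rated": 120, "budget": 40_000},
}


def raw_per_dollar(a):
    """Average score among those who answered, divided by budget."""
    return a["score"] / a["budget"]


def zero_coded_per_dollar(a):
    """Countries with no answers count as 0 on a 0-4 scale; then divide by budget."""
    reach = a["countries_rated"] / COUNTRIES
    return reach * (a["score"] - 1) / a["budget"]


lux, wb = agencies.values()
raw_ratio = raw_per_dollar(lux) / raw_per_dollar(wb)
adjusted_ratio = zero_coded_per_dollar(lux) / zero_coded_per_dollar(wb)
print(f"Luxembourg's lead per dollar: raw {raw_ratio:.0f}x, zero-coded {adjusted_ratio:.0f}x")
```

With these made-up numbers, zero-coding shrinks the small agency’s lead from roughly 457-fold to 12-fold without reversing it. Other inputs could reverse it, which is why the answer depends so heavily on how non-response is treated.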

For all of the reasons above, we’d be very cautious in suggesting our alternative results are ‘right,’ or even necessarily ‘righter.’ Other approaches (re-scoring answers on the underlying questionnaire scales, trying to account for bias introduced by country or type of respondent to produce ‘demographically representative’ scores, using different weights and so on) could dramatically change the rankings again. But our results do suggest that the top-placed agencies in the Aid Data rankings might want to be modest about their position: it may owe a lot to factors other than greater efficiency in turning aid resources into positive and impactful policy change.

So fear not, Greece. Your donor agencies scored a lowly 1.69, based on nineteen answers to the usefulness-of-advice question, placing you 57th and last, behind Libya’s donor agencies. Even so, perhaps you are still offering great value for money. And, to repeat, the Aid Data survey exercise remains extremely valuable in pointing the way towards making advice even more effective, so here’s hoping they do it again, even bigger and better!

Disclaimer

CGD blog posts reflect the views of the authors, drawing on prior research and experience in their areas of expertise. CGD is a nonpartisan, independent organization and does not take institutional positions.