April 28, 2009

It’s often said that the perfect is the enemy of the good. But in the area of program evaluation, the enthusiasm for a humanitarian program can lead to wide dissemination of optimistic results even if they are based on a flawed evaluation technique. In this case, the bad is the enemy of the good – because poor quality evaluation can deflect interest from good evaluation. Members of Congress will want to consider this cautionary note when it comes time for confirmation hearings for Eric Goosby as Global AIDS Coordinator and head of the President’s Emergency Plan for AIDS Relief, or PEPFAR, and ask about his plans for supporting better evaluation of this important program.

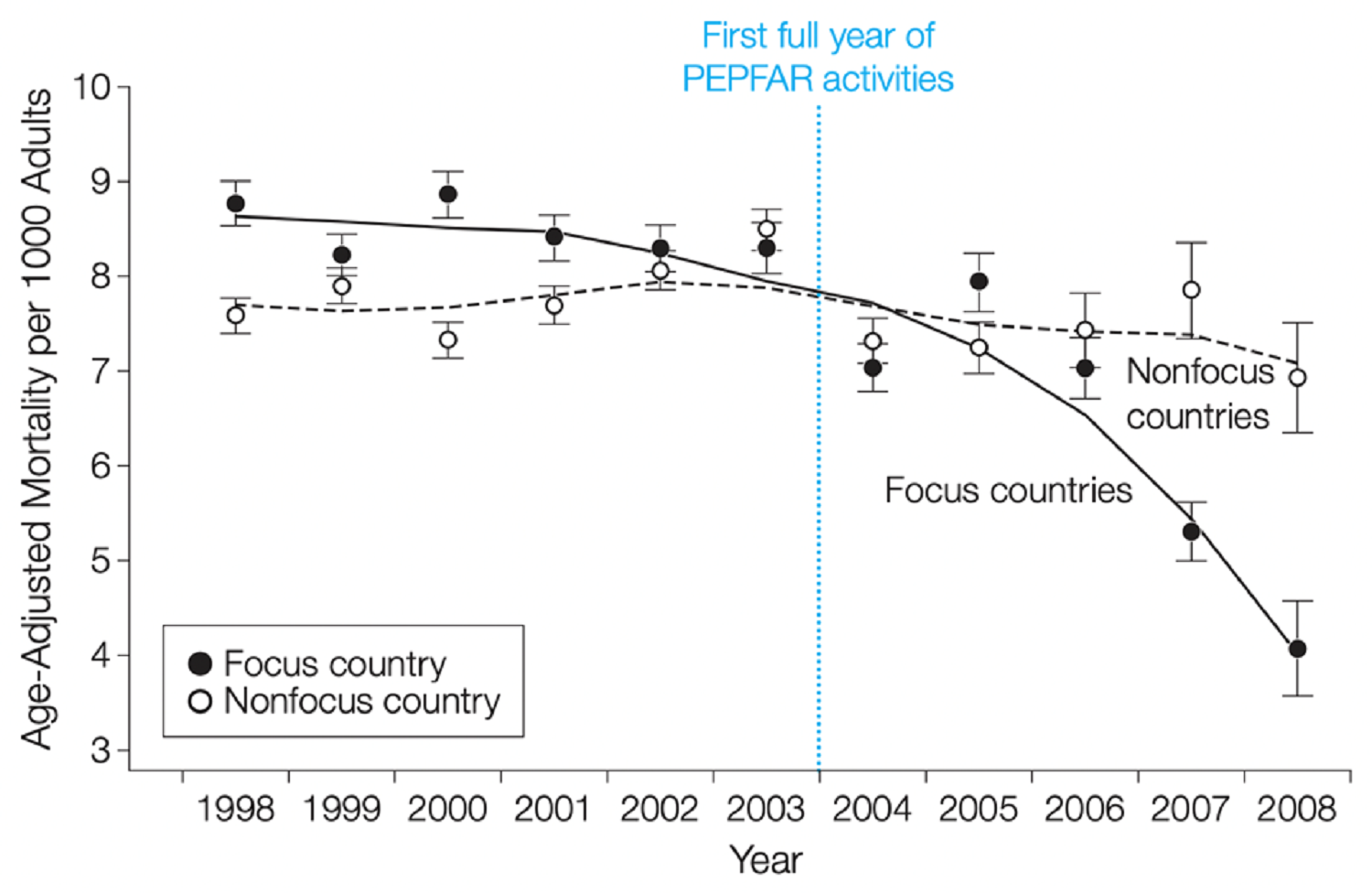

On April 9 the Washington Post published an editorial on the success of PEPFAR not only in placing people on treatment but also in reducing mortality in the PEPFAR countries. In support of the latter assertion, the editorial cites an article that is due to appear in the May 19 issue of Annals of Internal Medicine and is already available online. In the words of the Washington Post’s editorial staff, “[T]he authors of the study, Eran Bendavid of Stanford University and Jayanta Bhattacharya of the National Bureau of Economic Research, concluded that ‘about 1.2 million deaths were averted because of PEPFAR’s activities.’” Unfortunately, this conclusion is not warranted, whether it is drawn by the original authors or by the Post.

Bendavid and Bhattacharya arrived at their conclusion by comparing the mortality trends since the start of antiretroviral therapy in the 12 African PEPFAR focus countries with 29 other African countries. But how did they get data on mortality in those 41 countries? Perhaps readers thought that the authors had compared vital registration data in the 41 countries, but unfortunately none of these countries systematically collects data on the births and deaths of its residents. Readers might have guessed that the authors had used census data to measure mortality, but unfortunately only a few have conducted censuses in the last five years. Or readers might have reasonably conjectured that the authors had compared mortality estimates from systematic sample surveys such as the Demographic and Health Surveys that exist for many countries, but the authors did not attempt this exercise. A careful reading of their “methods” section reveals that they simply used the latest version of the UNAIDS/WHO data on AIDS mortality, available here.

The authors don’t raise the question of where UNAIDS might have gotten its data on mortality. The answer is that UNAIDS projected its mortality estimates. This is a perfectly respectable activity. I do it myself for the future (here and here), and UNAIDS’ job was to use known treatment enrollment data to project mortality into the past. The trick is to assume one can generalize from the various small studies on treatment success (summarized in Table 1 of Stover et al. here) and then to infer the trend in national AIDS mortality from that assumption and from national estimates of the number of patients enrolled.

But it is tautological for Bendavid and Bhattacharya to use the projected mortality data as proof that treatment is working when the mortality data itself has been generated by ASSUMING that treatment is working.
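To make the circularity concrete, here is a minimal sketch in Python. Every number and name in it is invented for illustration; none of it comes from UNAIDS, PEPFAR, or the Annals article, and the one-line linear model is a stand-in for, not a description of, the actual UNAIDS projection method. The point is only that a comparison run on projected deaths returns whatever benefit the projection assumed, regardless of what treatment really achieved.

```python
# Hypothetical numbers throughout: not UNAIDS, PEPFAR, or Annals data.
BASELINE_DEATHS = 100_000                              # deaths with no treatment (assumed)
ENROLLED = {"focus": 50_000, "comparison": 10_000}     # patients on treatment (assumed)


def deaths(averted_per_100_patients, group):
    """Deaths in a group if each 100 enrolled patients avert the given number of deaths."""
    return BASELINE_DEATHS - averted_per_100_patients * ENROLLED[group] // 100


def gap(averted_per_100_patients):
    """Comparison-group deaths minus focus-group deaths under the given benefit."""
    return deaths(averted_per_100_patients, "comparison") - deaths(
        averted_per_100_patients, "focus"
    )


ASSUMED_BENEFIT = 20  # the benefit built into the projection model (assumed)

for true_benefit in (0, 10, 20):
    projected_gap = gap(ASSUMED_BENEFIT)  # what an analysis of projected data sees
    actual_gap = gap(true_benefit)        # what real mortality data would show
    print(f"true benefit {true_benefit:>2}: projected gap {projected_gap}, "
          f"actual gap {actual_gap}")
```

In this toy setup the projected gap is the same in every row, because the true benefit never enters the projection. Only measured mortality could distinguish a treatment program that works from one that does not.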

As I mentioned in my Q&A of November 2006, it was fairly surprising that the 2006 issue of the UNAIDS annual data compendium showed an increase in the number of AIDS deaths in Sub-Saharan Africa despite the fact that one million persons had been placed on anti-retroviral therapy. The most likely explanation is that in the rush to get out the first annual report since treatment had become so widespread, the UNAIDS team responsible for mortality projections had not yet entered into their model the number of people on treatment. Neither I nor anyone else would have concluded that AIDS treatment rollout had caused an increase in AIDS mortality.

Since 2006, UNAIDS has worked hard to make the country data on AIDS mortality consistent with national estimates of treatment enrollment and the best guesses available on treatment’s benefits. The 2007 update, which I blogged about, revised all the data to incorporate treatment uptake. The 2008 update, available here, provides retrospective estimates of mortality and prevalence, which the UNAIDS team has “backcast” to be consistent with treatment numbers over the last few years as well as with current lower prevalence estimates.

The UNAIDS data team is to be congratulated on producing a comprehensive revision of their data on HIV prevalence, treatment enrollment and mortality. They have done their best to make these data internally consistent using the latest estimates of survival with and without antiretroviral therapy. But no one should mistake their mortality projections for evidence that treatment is working. We need to have REAL data on the success of treatment in order to judge how well it’s working and to make the case for its continued support.

My colleague Nandini Oomman has recently published a memo to President Obama urging the release of PEPFAR data on contracting. I join her in that request – and further ask that PEPFAR’s data on effectiveness and unit costs also be released. These data have been collected with billions of dollars in US taxpayer funding. What is the justification for keeping them from the public?

Disclaimer

CGD blog posts reflect the views of the authors, drawing on prior research and experience in their areas of expertise. CGD is a nonpartisan, independent organization and does not take institutional positions.