At CGD, we have been closely watching the development of the new Pandemic Fund, which aims to strengthen pandemic prevention, preparedness, and response (PPR) capabilities in low- and middle-income countries (LMICs). The Pandemic Fund recently published a Results Framework to guide project-level monitoring, evaluation, and learning efforts. The framework is a clear indication of the Fund’s commitment to ensuring the effectiveness of its interventions.

While the Pandemic Fund should be lauded for its relatively quick launch and willingness to course-correct in its inaugural year, the Results Framework, and in particular its indicators, has room for improvement in future funding rounds. In this blog, we argue that the Results Framework’s use of the Joint External Evaluation (JEE) is not fit for purpose, because the JEE is a diagnostic tool rather than a performance framework.

The Pandemic Fund was not created solely to ensure country compliance with the International Health Regulations (IHR), but more broadly for pandemic preparedness writ large. The Results Framework for its grants should not, by default, be tied to JEE and SPAR scores. The Pandemic Fund could carefully select a smaller set of indicators that are independently verified and constitute true performance outcomes, potentially tied to the specific types of surveillance approaches chosen by a country for its grant.

To ensure additionality, the Results Framework could examine increases in country spending based on government expenditure on public health as a proportion of total government expenditure, thus reflecting the priority a government assigns to public health within its budget.

As background, early expressions of interest indicate tremendous demand, and a need for $5.5 billion, in the areas of surveillance, laboratories, and human resources. But countries will have to make do with scarce funding: just $300 million for this first Call for Proposals, which closes in May. Decisions on selections will be announced by the Governing Board in July.

The Results Framework assesses progress against metrics that are not fit for purpose

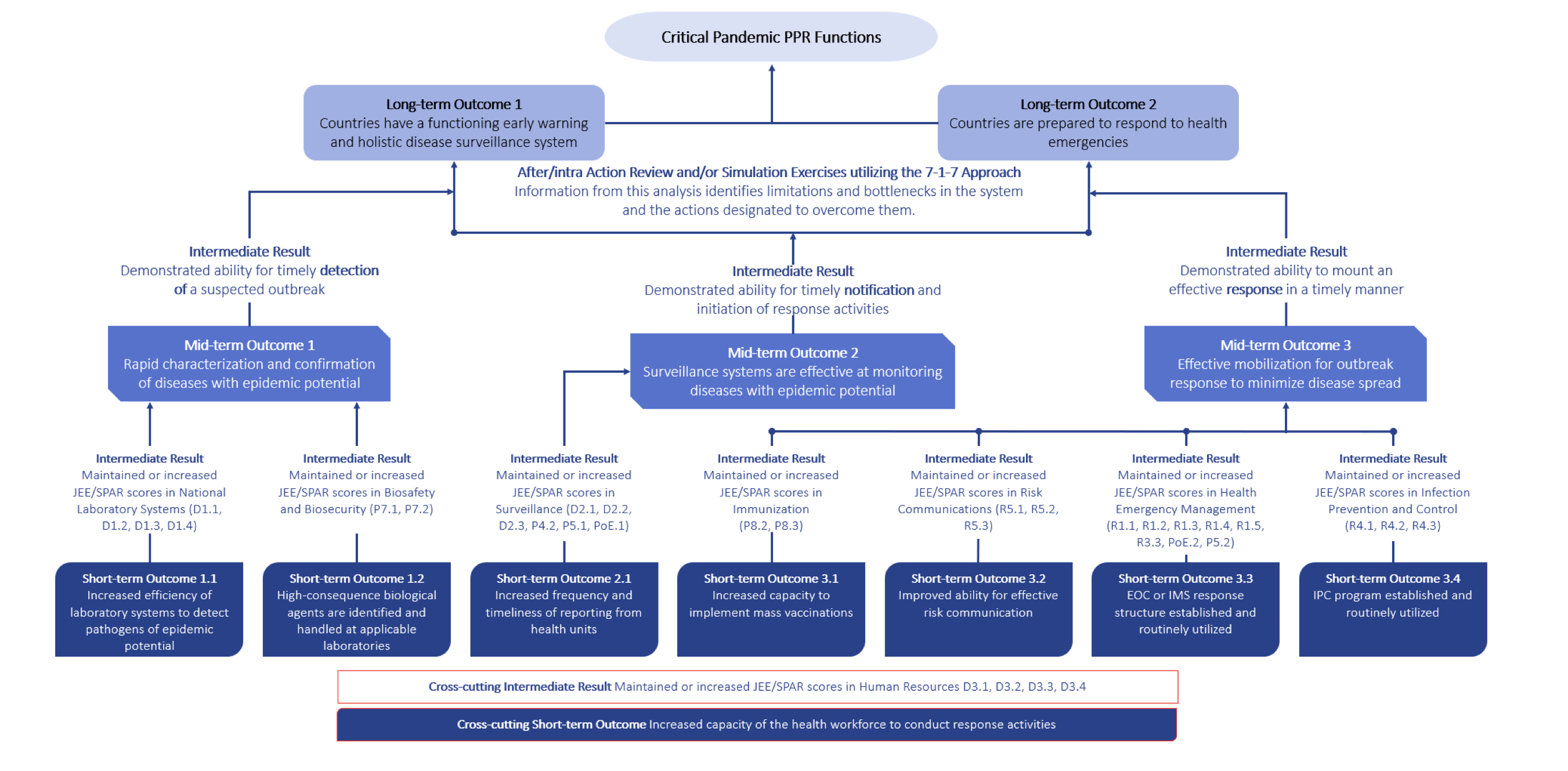

The Results Framework is composed of four targeted results or “Elements”: (1) Building capacity/demonstrating capability; (2) Fostering coordination nationally (across sectors within countries), regionally, and globally; (3) Incentivizing additional investments in PPR; and (4) Ensuring administrative/operational efficiency of Pandemic Fund resources (see Figure 1 below). The proposed framework uses the JEE for several indicators under Element 1, but we argue that the JEE is not suitable for this purpose.

Figure 1. The Pandemic Fund’s Results Framework proposes to assess progress against maintained or increased JEE/Self-Assessment Annual Reporting (SPAR) scores

Source: Pandemic Fund Results Framework (February 2023)

The JEE is often considered the default instrument to measure pandemic preparedness, in part because of its authority as a WHO tool and its endorsement by member states under the IHR. The instrument, initially developed in 2016, has a clearly defined regulatory purpose—to assess compliance with the IHR.

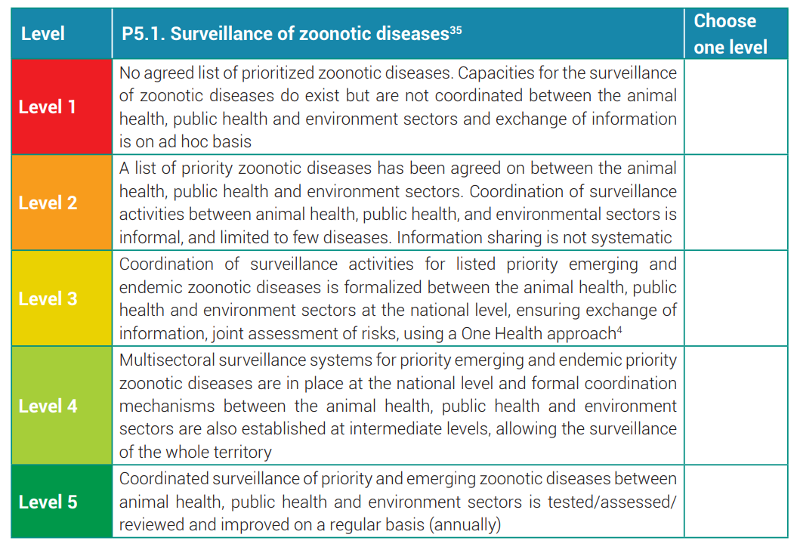

The JEE includes, for example, measures such as whether a standard operating procedure has been developed for a certain area of preparedness. Such measures guide the diagnosis of preparedness progress but do not measure the quality or performance of preparedness. See Figure 2 for a detailed example of one such indicator (P5.1), one of several listed in Figure 1. It is therefore no surprise that JEE scores did not accurately predict country performance against COVID-19: studies of cross-country performance found that JEE scores had little to no power to explain which countries were better prepared.

That’s not to say that the JEE is not a useful tool to help countries plan for preparedness or track compliance with the IHR. But passing a driver’s test does not guarantee that you’ll be a good driver, and going through the shopping list for school supplies does not mean you’ll get good grades in school. In the same way, a regulatory purpose does not imply a purpose for measuring the performance or results of a grant, or for serving as a basis for allocating funding.

Figure 2. A sample JEE indicator: P5.1 Surveillance of zoonotic diseases

Source: Joint External Evaluation Tool: International Health Regulations (2005) (Third Edition, 2022)

The data collected for the JEE and the Results Framework is mostly subjective

Overall, the Results Framework incorporates a significant proportion of qualitative data, requiring expert assessment rather than objective and independently observable or replicable data. Over two-thirds of the Framework’s indicators use qualitative data, whereas just five use solely quantitative data sources. The methods for collecting the data and information used to make assessments might also need a re-think to ensure timeliness and accuracy.

Furthermore, many indicators are dependent on already subjective data sources such as the project’s annual report, although they are “independently verified” through the JEE methodology. And other indicators will be complicated to assess in a meaningful and effective manner—the extent to which the capabilities built by Pandemic Fund projects are sustained after the project has ended, for example, could require a multi-year analysis of projects after their completion.

The JEE/SPAR scores are posted publicly and are theoretically “transparent” (and actually have been for quite some time), but the details for how scores are obtained, and the basis for the scores, are less transparent.

Less is more: having fewer indicators sharpens attention

The abundance of indicators (currently 16) under four distinct elements also makes it difficult for countries to assess the relative importance of each. Alternatively, the Fund could consider paying based on a single key indicator of progress, which also forces attention to a specific outcome. The careful selection of a single outcome indicator is also a key argument of the Cash on Delivery approach, an innovative approach to performance-based financing developed by Nancy Birdsall and William Savedoff at CGD.

Bake in evaluation for continuous and adaptive learning

The framework is a start toward measuring the results of grants. But the journey towards greater pandemic preparedness is a continuous and adaptive learning process, not a one-off activity carried out once every few years. Countries need to make improvements through feedback during times of “peace”, i.e. in the absence of a major pandemic.

CGD has regularly emphasized the importance of independent evaluation to inform learning and evidence-based policy making through its working groups emphasizing the value of impact evaluation (see here and here).

A good example of evaluation is the World Bank’s recent Independent Evaluation Group (IEG) evaluation of COVID-19 projects—which rightfully did not use the JEE framework to classify and evaluate the results of project investments. The evaluation report’s independence as well as mixed methods approach to evaluate the effectiveness of projects, both ex-ante (by investing in proven or evidence-based interventions) and ex-post (by assessing the effectiveness of the interventions) are a reminder of the importance of the development effectiveness agenda for global health and for pandemic preparedness more generally.

The Pandemic Fund should take a cue from the IEG and build evaluation into its projects and grants from the get-go. It should not wait to evaluate until after projects have been completed.

Ensuring additionality is crucial

The Pandemic Fund has a very targeted role to play with regard to pandemic preparedness, one key role being additionality and incentivizing new investment (which, naturally, the JEE omits). Yet in its recent guidance for the call for proposals, the Fund did not mention any specific ways, standards, or best practices among global health agencies for ensuring additionality or co-financing.

Ensuring additionality in practice is not easy when examining how governments and their implementing entities spend money. Because the Pandemic Fund will give grants through 13 approved implementing entities (including multilateral development banks, the Global Fund to Fight AIDS, Tuberculosis and Malaria, and United Nations agencies like UNICEF), the grants the Pandemic Fund provides will pass through these implementing entities to governments who are the ultimate recipients. Ensuring additionality will depend heavily on the effectiveness of the co-financing arrangements and mechanisms of each implementing agency. Additionality is also about incentivizing domestic health spending, not additional external aid sources.

In our view, the main kind of additionality to be assessed should relate to improved performance on preparedness (surveillance, laboratories, or human resources), rather than simply financial additionality.

As far as financial additionality goes, this can be assessed ex ante and ex post. Ex ante, additionality could be assessed through the way that a project is designed: an explicit arrangement for co-financing has been set out in the assessment criteria of the Pandemic Fund’s Technical Advisory Panel (TAP). Ex post, the WHO’s Global Health Expenditure Database (GHED) can be used to detect increases in government spending on health. The WHO GHED is the benchmark methodology and tool for measuring government spending in comparison to external assistance on health.

We urge against the tendency to track whether government spending on pandemic preparedness has specifically increased. Instead, the Pandemic Fund should focus on the overall increases in government spending on public health activities relative to overall government spending, which would be inclusive of core functions of pandemic preparedness.
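As a rough illustration of the indicator we have in mind, the short Python sketch below computes government health spending as a share of total government spending and the percentage-point change between two years. The function names and all figures are hypothetical and chosen only for illustration; they are not drawn from the GHED or from any country’s budget.

```python
def health_share(gov_health_spending: float, total_gov_spending: float) -> float:
    """Government health spending as a fraction of total government spending."""
    if total_gov_spending <= 0:
        raise ValueError("total government spending must be positive")
    return gov_health_spending / total_gov_spending


def change_in_share(baseline: tuple, endline: tuple) -> float:
    """Percentage-point change in the health share between two years.

    Each argument is a (health spending, total spending) pair
    expressed in the same currency unit.
    """
    return 100 * (health_share(*endline) - health_share(*baseline))


# Hypothetical figures (same currency unit), for illustration only.
baseline_year = (8.0, 100.0)   # health spending, total spending
endline_year = (10.8, 120.0)

print(round(change_in_share(baseline_year, endline_year), 2))
```

In this made-up case the health share rises from 8 percent to 9 percent of the budget. Measuring the share, rather than the absolute amount, avoids counting growth in health spending that merely keeps pace with a growing overall budget.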

(As an aside, we also urge against attempts to map the JEE to the WHO's GHED, which would reinforce vertical silos rather than move towards horizontal and integrated systems.)

There are various attempts to measure aid flows on these functions, such as the Georgetown Global Health Security Tracker (which maps the flow of funding and in-kind support for global health security), the OECD Total Official Support for Sustainable Development (which measures contributions to international public goods), and the Institute for Health Metrics and Evaluation’s (IHME) global development assistance for health. But there is no standardized, universally agreed-upon definition of what constitutes pandemic preparedness, whether for aid or for government spending.

What’s needed is comprehensive tracking of on-budget spending for high-priority public health interventions that address severe acute respiratory infections, yet the Results Framework as laid out in Figure 1 currently omits spending and financing.

Don’t forget the basics: data and health information systems

Surveillance is not merely about having the human resources, such as field epidemiologists, but also about the information technology and data systems that enable the flow of information and communication across the country. Regrettably, the JEE does not emphasize integrated information systems (or other areas such as citizen engagement or mechanisms to reach vulnerable groups).

One key to successful epidemic surveillance is the ability to report deaths and not only detect cases. Investing in Civil Registration and Vital Statistics (CRVS) systems is a core part of a country’s multi-component surveillance system and can provide detailed information about a country’s population and death data. CRVS is one of the fundamental activities of public health, crucial for improving health (see here). A functioning CRVS system supports not only pandemic preparedness but also 67 of 231 SDG indicators.

Timely, accurate data on COVID-19 was a recurrent problem for all countries in the world. It follows that, even for the most prepared countries, the JEE does not capture the essence of having integrated surveillance systems that build on information technology infrastructure.

By linking progress to improvements in birth registration and death registration coverage, the Pandemic Fund could strengthen core public health surveillance institutions, upon which other types of surveillance and reporting of emerging diseases and ongoing respiratory infections could build.

The work of the Health Data Collaborative, hosted by the World Health Organization, emphasizes the need to implement the health data governance principles, which can reduce fragmentation in IT systems and integrate infectious disease data with government-hosted public health data systems more generally for timely, accurate reporting with local and international uses.

The authors gratefully acknowledge comments from Amanda Glassman, Javier Guzman, and several colleagues in the World Health Organization and the World Bank.

Disclaimer

CGD blog posts reflect the views of the authors, drawing on prior research and experience in their areas of expertise. CGD is a nonpartisan, independent organization and does not take institutional positions.