
Improving learning outcomes at scale is hard. That may seem obvious, but only recently have policymakers and donors become aware of just how dire, and how broad, the learning crisis is. Most of their efforts to improve learning have been pilot programs. Although it has sometimes been possible to improve outcomes at this small scale, doing so at scale, across thousands of schools, is an entirely different challenge, and that is the level at which change must happen to fix the crisis.

What’s needed is a better understanding of how learning improves—that is, the classroom- and system-level ingredients of successful learning at scale.

To get there, CGD is supporting, and RTI International is implementing, an innovative study we’re calling Learning @ Scale. In this blog post we describe the process we used to find programs that increased learning at scale and offer some reflections on our initial findings.

The selection process

In 2019, our team undertook a worldwide search for compelling examples of early-grade learning outcomes being improved at measurable scale. We suspected there were many such examples, but our challenges were finding them, identifying them rigorously, and making sure our search was thorough enough not to miss any important ones.

We began with a broad set of criteria, which eventually coalesced into a list of 10 key indicators. Initially, we were agnostic about which academic subject(s) the candidate programs focused on, but we wanted programs similar enough that we could draw comparable conclusions. That led us to target programs that included at least a literacy component and that aimed to improve classroom teachers’ effectiveness. Although we began with a more expansive search, we ultimately also narrowed the scope to programs working at the lower primary level, in no small part because there were so few large-scale, effective programs at the upper primary, secondary, or tertiary levels.

To be considered, programs had to:

- be based in low- or middle-income countries,

- have learning impact data available for analysis, and

- be able to give researchers access to schools and key personnel for interviews and site visits.

Effectiveness and scale

The most difficult criteria to meet were “effectiveness” and “scale.”

In the case of effectiveness, to make recommendations based on the examples we were studying, we had to be sure the programs led to meaningful improvements in learning. This meant they needed to have undergone an impact evaluation of learning outcomes, preferably one using an experimental or a quasi-experimental design.

That said, the effectiveness and scale criteria were in some inherent tension, because programs that had been scaled nationally could not be evaluated against a control group. For example, the Tusome Early Grade Reading Activity in Kenya was implemented in every public primary school in the country.[i] In such cases, we relied on earlier pilot evaluations and on data showing improvements in achievement over time as evidence of effectiveness. Another challenge was finding projects that had been evaluated and were still in operation. For example, Pratham’s Read India government partnership program had been evaluated in a randomized controlled trial in Haryana State in 2012–2013 but was no longer operating in that state as of 2019. However, large improvements in reading scores in other states, improvements that greatly surpassed national rates, served as strong evidence that similar programs currently operating there were successful.

We defined scale as “operating in at least two administrative subdivisions” (ideally with full coverage and no fewer than 500 schools), and we also looked for initiatives that had been integrated into the government education system.

We issued a broad call for program nominations in April 2019. We contacted many implementing partners, foundations, bilateral agencies, and university and think tank researchers directly, asking them either to name their own projects that could fit our criteria or to help identify other programs. We appreciated the thoughtful responses from so many education researchers and implementers worldwide, which yielded more than 50 program nominations. After in-depth interviews, we found 16 programs that met all the basic selection criteria. We shared this list with the Learning @ Scale Advisory Group, which consists of more than a dozen renowned technical experts and is convened by CGD. The advisory group shared our concern that the initial list lacked programs from francophone or Arabic-speaking countries, which led us to issue a second call for nominations. This extra effort unearthed several additional candidate programs, one of which is included in the final list of eight selected programs.

Selected Programs for Learning @ Scale

| Program | Timeline | Country | Lead Implementer | Donor |
|---|---|---|---|---|
| Scaling Early Reading Intervention (SERI) | 2015–2020 | India | Room to Read | US Agency for International Development (USAID) |
| Education Quality Improvement Programme (EQUIP-T) | 2014–2020 | Tanzania | Cambridge Education | UK Department for International Development (DFID) |
| Partnership for Education: Learning | 2014–2020 | Ghana | FHI 360 | USAID |
| Tusome Early Grade Reading Activity | 2015–2020 | Kenya | RTI International[i] | USAID |
| Pakistan Reading Project (PRP) | 2013–2020 | Pakistan | International Rescue Committee (IRC) | USAID |
| Read India | 2012– | India | Pratham | Multiple |
| Lecture pour Tous (LPT) | 2016–2021 | Senegal | Chemonics | USAID |
| Northern Education Initiative Plus (NEI+) | 2015–2020 | Nigeria | Creative Associates | USAID |

[i] Full disclosure: Tusome is implemented by RTI International, and RTI is also leading the Learning @ Scale research. CGD and RTI have developed processes to ensure the research remains rigorous.

A few reflections

Undertaking this selection process and noting commonalities across the programs has led to some reflections on the state of the field of large-scale program implementation.

Evaluation of government-led programming. We were concerned that the final list included no programs implemented by governments alone. This doesn’t mean there are no exciting government interventions worth studying. It does mean, however, that such programs are seldom rigorously evaluated, and rigorous evaluation was an important part of our selection criteria. How can the sector encourage governments engaged in these interventions to conduct more rigorous evaluations?

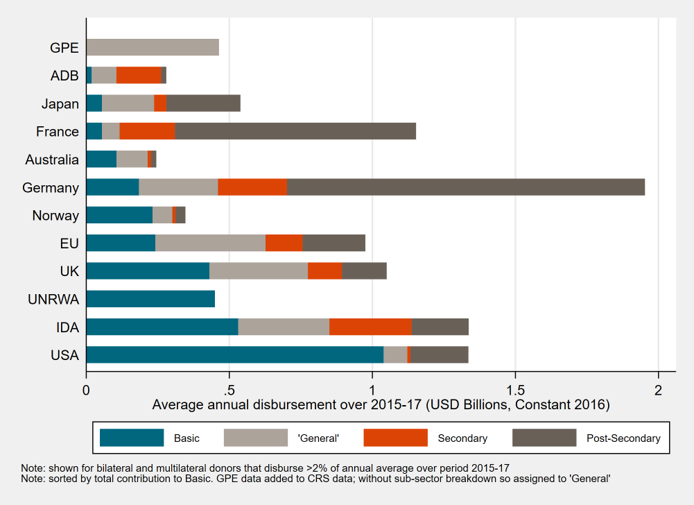

Donors’ focus on improving learning. It was notable that this list is dominated by USAID. USAID has been the largest investor in improving learning at scale for several years, but they are not alone, as figure 1 below shows. DFID had one program in the list, but several donors from which we expected to have examples did not. UNICEF does fund Room to Read’s efforts in other states in India, but we spent significant effort to identify large-scale programs from several other prominent large-scale donors in the sector and still did not capture any of their interventions on this list. Several large-scale donors simply had no candidate programs that had both real scale and rigorous evaluations of learning outcomes.

Figure 1. Donor expenditure on education

Source: CGD analysis of OECD CRD data

The problem is scale. We found many programs that had an impact on learning but weren’t operating at large (or even medium) scale. We cannot assume that such programs would work at scale, and in fact, the evidence suggests that many of them do not have implementation designs that consider the realities of government systems at scale. In addition to assessing effectiveness in pilot studies, programs also need to invest more at the design stage to test whether and how they will work within government systems.

Literacy, yes, but also other areas. It is indeed possible to improve literacy outcomes at scale. We welcome the opportunity to learn more about early literacy, but it is notable how few large-scale programs focused on other subjects or on levels of the education system beyond the early grades, and we would like to know more about what happens in those subjects and in other parts of the primary and secondary systems.

Research designs to estimate impact at scale need more investment. We did not see a wide variety of randomized designs or other methods for identifying causal impact. Having a range of rigorous methods in place to estimate impact is an important part of how our field learns.

The next step in the Learning @ Scale study is to collect data in the field on these successful large-scale interventions. That effort began in February 2020 and will continue for the next several months. We are very interested in finding out what these programs do in classrooms, how they support teachers, and how they interact with government systems to ensure large-scale impact on learning. Look for updates on what is working in these programs, including a report on the Learning @ Scale study later this year.

To return to where we began, improving learning at scale is very hard, but we are excited to discover how these eight programs did it and to help others learn from them.

For a list of funders for CGD’s education work, see www.cgdev.org/section/funding

Disclaimer

CGD blog posts reflect the views of the authors, drawing on prior research and experience in their areas of expertise. CGD is a nonpartisan, independent organization and does not take institutional positions.