
Management clearly matters for school quality, but the jury is still out on whether programs designed to improve school management actually work. Studies find mixed results, with many finding no effects.

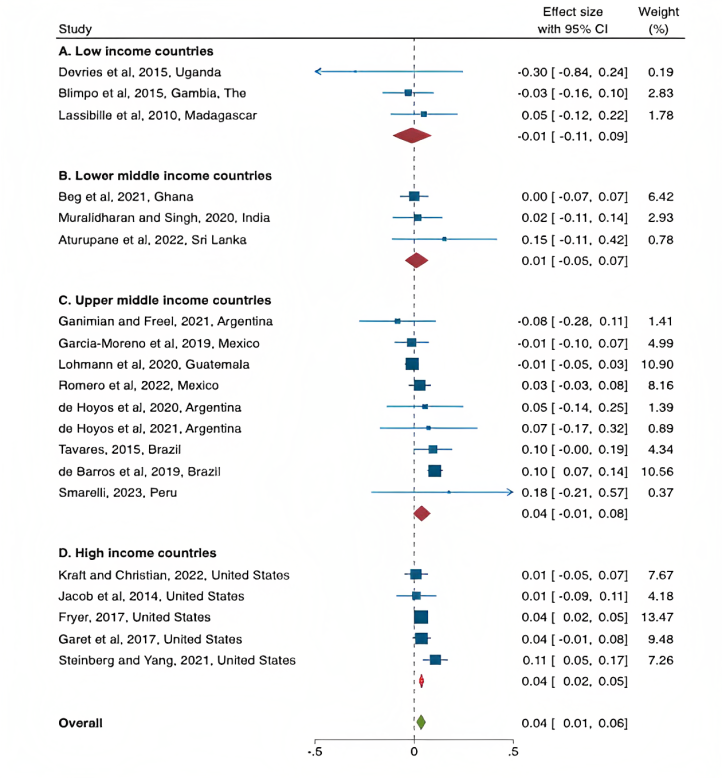

In a new systematic review and meta-analysis, we compile the evidence on school management training programs. After combing through more than 2,500 studies, we found 20 experimental or quasi-experimental evaluations of school leader training that reported student learning outcomes. The key advantage of a meta-analysis is that pooling studies improves our ability to detect small effects. Across the 20 studies, we find a small overall effect of 0.03 to 0.04 standard deviations. This effect is so small that many of the individual studies did not have a large enough sample to detect it.
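Why does pooling help? A minimal sketch, assuming a simple fixed-effect (inverse-variance weighted) estimator, which may not be the exact estimator used in the review. The five study results below are hypothetical, not the studies in the review: none is individually significant, but the pooled estimate is more precise than any single study and its confidence interval excludes zero.

```python
import math

def pool_fixed_effect(estimates):
    """Fixed-effect (inverse-variance weighted) pooled estimate.

    estimates: list of (effect_size_sd, standard_error) pairs, one per study.
    Returns (pooled_effect, pooled_standard_error).
    """
    # More precise studies (smaller SE) get proportionally more weight
    weights = [1.0 / se ** 2 for _, se in estimates]
    total_weight = sum(weights)
    pooled = sum(w * es for w, (es, _) in zip(weights, estimates)) / total_weight
    # The pooled SE shrinks as total weight (total information) grows
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se

# HYPOTHETICAL study results in SD units, not the studies in the review.
# Each has a 95% CI that includes zero, i.e. |effect / se| < 1.96.
studies = [(0.04, 0.03), (0.02, 0.025), (0.05, 0.04), (0.03, 0.035), (0.035, 0.03)]

for es, se in studies:
    print(f"study  {es:.3f}  95% CI [{es - 1.96 * se:+.3f}, {es + 1.96 * se:+.3f}]")

pooled, pooled_se = pool_fixed_effect(studies)
print(f"pooled {pooled:.3f}  95% CI [{pooled - 1.96 * pooled_se:+.3f}, {pooled + 1.96 * pooled_se:+.3f}]")
```

A random-effects model, which allows the true effect to differ across studies, would typically give a wider pooled interval than this fixed-effect sketch.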

But should we even care about effects this small? The short answer is yes. Whilst the effect is small per child, it applies to every child in the school. And you’re only paying to train one person: the school leader. This means that these programs can still be cost-effective compared to more expensive programs.
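The arithmetic behind this argument can be sketched as learning gains summed across all children reached, per $100 spent. Every input below is hypothetical and chosen only for illustration; none comes from the review, which notes that few studies report costs.

```python
def sd_gains_per_100_usd(effect_sd, children_reached, total_cost_usd):
    """Learning gains summed across children (in SD), per $100 of program cost."""
    return effect_sd * children_reached / total_cost_usd * 100

# HYPOTHETICAL inputs for illustration only; not figures from the review.
# Leader training: small effect, but it reaches every child in a 400-pupil school,
# and the cost is training one head teacher.
leader_training = sd_gains_per_100_usd(effect_sd=0.035, children_reached=400, total_cost_usd=500)

# A per-child program: a much larger effect, but paid for child by child.
per_child_program = sd_gains_per_100_usd(effect_sd=0.20, children_reached=1, total_cost_usd=50)

print(f"leader training:   {leader_training:.1f} SD per $100")
print(f"per-child program: {per_child_program:.1f} SD per $100")
```

Under these made-up numbers the leader program delivers more total learning per dollar despite a far smaller per-child effect. The comparison flips if training costs much more per school or reaches fewer children, which is why cost data matter.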

Why are effects so small? We find three common barriers to program effectiveness: low take-up by school leaders, a lack of incentives or structure for implementing recommendations, and the long causal chain from management practices to student learning. We expect management to improve student learning indirectly, through changes in teaching practice, which take time to show up. We see this in the data: the longer the wait before outcomes are measured, the larger the effect.
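One simple way to check a pattern like this is a meta-regression: regress each estimate's effect size on the time between program start and testing, weighting by precision. This is a sketch with hypothetical data, not necessarily how the review estimated the relationship; with real data one would also report the slope's standard error and consider other differences between short- and long-follow-up studies.

```python
def weighted_slope(months, effects, ses):
    """Inverse-variance weighted least-squares slope of effect size on follow-up time."""
    w = [1 / se ** 2 for se in ses]
    total = sum(w)
    x_bar = sum(wi * x for wi, x in zip(w, months)) / total
    y_bar = sum(wi * y for wi, y in zip(w, effects)) / total
    sxy = sum(wi * (x - x_bar) * (y - y_bar) for wi, x, y in zip(w, months, effects))
    sxx = sum(wi * (x - x_bar) ** 2 for wi, x in zip(w, months))
    return sxy / sxx

# HYPOTHETICAL estimates: months from program start to test, effect (SD), SE
months = [6, 12, 18, 24, 36]
effects = [0.01, 0.02, 0.03, 0.04, 0.06]
ses = [0.03, 0.025, 0.02, 0.03, 0.04]

slope = weighted_slope(months, effects, ses)
print(f"{slope * 12:.3f} SD per additional year of follow-up")
```

A positive slope is consistent with effects that build up slowly as changes in management feed through to teaching.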

We also find considerable variation in effectiveness across interventions and contexts. Programs in richer countries are more effective than those in poorer countries. Overall we use 39 estimates from 20 studies, of which 5 are from the US, 9 from upper-middle-income countries, and the rest from low- and lower-middle-income countries. Other easily quantified program features, such as the number of days of training offered or the number of schools included in the program, don’t seem to make much difference. There is still much more to learn about which combination of training, coaching, mentoring, peer learning, performance incentives, and school inspections matters most (we hope to learn more about this from an RCT in progress in India).

Figure 1. Meta-analysis results by country income

Note: Studies are grouped according to the current World Bank country income classification of their setting. Squares indicate study effect sizes and solid horizontal lines indicate 95 percent confidence intervals. Square size is proportional to study weight, which is estimated based on the precision of the estimate. Red diamonds indicate sub-group mean effects, and the green diamond indicates the overall mean effect. Effect sizes and standard errors for each study are both calculated as the mean of individual estimates across different subjects and time periods within each study. This approach is conservative: it assumes perfect correlation between estimates within each study, and so gives studies with multiple estimates no increase in precision or weight.
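The within-study averaging described in the note can be made concrete. In this sketch, each study's estimates are collapsed by taking the mean effect and the mean SE; under perfect correlation the SE of a mean of estimates equals the mean of their SEs, so extra estimates add no precision. Studies are then combined into subgroup and overall means with inverse-variance weights. The data are hypothetical, and the weighting scheme is an assumption: the figure note does not say whether the diamonds use fixed- or random-effects weights.

```python
import math
from collections import defaultdict

def collapse_study(estimates):
    """Collapse one study's estimates (subjects x time periods) into one pair.

    Effect size and SE are each a simple mean. Averaging the SEs equals the SE
    of the mean effect when the estimates are perfectly correlated, so a study
    gains no precision from reporting more estimates.
    """
    k = len(estimates)
    mean_es = sum(es for es, _ in estimates) / k
    mean_se = sum(se for _, se in estimates) / k
    return mean_es, mean_se

def inverse_variance_mean(pairs):
    """Inverse-variance weighted mean and its SE for a list of (es, se) pairs."""
    weights = [1 / se ** 2 for _, se in pairs]
    total = sum(weights)
    mean = sum(w * es for w, (es, _) in zip(weights, pairs)) / total
    return mean, math.sqrt(1 / total)

# HYPOTHETICAL data: (income group, [(effect_sd, se) per subject/period])
studies = [
    ("High income", [(0.08, 0.04), (0.06, 0.05)]),
    ("High income", [(0.05, 0.03)]),
    ("Upper-middle income", [(0.03, 0.02), (0.01, 0.03), (0.02, 0.025)]),
    ("Lower-middle income", [(0.01, 0.02)]),
    ("Lower-middle income", [(0.02, 0.03), (0.00, 0.03)]),
]

collapsed = [(group, collapse_study(ests)) for group, ests in studies]

by_group = defaultdict(list)
for group, pair in collapsed:
    by_group[group].append(pair)

for group, pairs in by_group.items():
    mean, se = inverse_variance_mean(pairs)
    print(f"{group:<22} subgroup mean {mean:.3f} (SE {se:.3f})")

overall, overall_se = inverse_variance_mean([pair for _, pair in collapsed])
print(f"{'Overall':<22} mean {overall:.3f} (SE {overall_se:.3f})")
```

Treating a study's estimates as independent would instead shrink its SE (roughly by the square root of the number of estimates) and give it more weight, which is the optimistic assumption the note avoids.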

Are these results “externally valid”? Do well-run pilots by NGOs and academics reflect the kind of programs that governments run at scale? To answer this question, we survey practitioners responsible for implementing at-scale programs. The programs with rigorous evaluations do tend to be smaller and cheaper than larger-scale programs, but they have similar durations and staffing ratios. Evaluated programs, and at-scale programs run by NGOs, are more likely to focus on “high-quality practices.”

In addition to reviewing programs to improve management, we also review data on the characteristics and responsibilities of school leaders in lower-middle-income countries. Using data from large-scale multi-country surveys, we show that school leaders in poorer countries tend to have less autonomy, education, training, and experience than their counterparts in high-income countries.

We conclude with some implications and directions for future research. We need more evidence on different design features: what kind of training works best. Few school management training studies report costs, making it difficult to calculate cost-effectiveness. The headline finding of a small average effect size should be daunting for researchers, including ourselves, because it’s hard to detect such small effects without very large samples. This matters because these effects might still be meaningful. We also need more evidence on outcomes other than test scores; school leaders also play a key role in setting school culture and ensuring student safety. We have much more to learn about other dimensions of quality.
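To see how large “very large” is, here is a rough power calculation for a two-arm trial. Because the training targets the school leader, randomization usually happens at the school level, so the sample is inflated by the design effect 1 + (m − 1) × ICC. The 40 tested students per school and the ICC of 0.2 are hypothetical assumptions, and the formula ignores baseline covariates, which would reduce the required sample in practice.

```python
import math
from statistics import NormalDist

def schools_per_arm(effect_sd, students_per_school, icc, alpha=0.05, power=0.80):
    """Approximate schools needed per arm in a two-arm, school-randomized trial.

    Normal-approximation formula for a difference in standardized means with
    equal allocation, inflated by the design effect 1 + (m - 1) * ICC.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_power = NormalDist().inv_cdf(power)
    # Students per arm if students were randomized individually (outcome SD = 1)
    n_individual = 2 * (z_alpha + z_power) ** 2 / effect_sd ** 2
    # Clustering: students in the same school have correlated outcomes
    design_effect = 1 + (students_per_school - 1) * icc
    return math.ceil(n_individual * design_effect / students_per_school)

# HYPOTHETICAL design: 40 tested students per school, ICC of 0.2
small = schools_per_arm(effect_sd=0.035, students_per_school=40, icc=0.2)
large = schools_per_arm(effect_sd=0.20, students_per_school=40, icc=0.2)
print(f"0.035 SD effect: ~{small} schools per arm")
print(f"0.20 SD effect:  ~{large} schools per arm")
```

Under these assumptions, a 0.035 SD effect needs roughly 2,800 schools per arm, versus under 100 for a 0.20 SD effect. The required sample scales with 1/effect², so halving the effect quadruples the sample.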

Disclaimer

CGD blog posts reflect the views of the authors, drawing on prior research and experience in their areas of expertise. CGD is a nonpartisan, independent organization and does not take institutional positions.

Image credit for social media/web: Adobe Stock