Each year, more than two million secondary-school students across Nigeria, Ghana, Sierra Leone, Liberia and The Gambia sit coordinated tests known as the West African Senior School Certificate Examinations (WASSCE). The 2020 results are particularly important for Ghana’s president Nana Akufo-Addo, as they reflect the performance of the first beneficiaries of his government’s Free Senior High School policy. Politicians have already begun citing 2020’s stellar results to pass judgment on that policy. But what if the exam results are not, in fact, comparable over time?

The WASSCE, like similar exams used in most African nations and many other countries around the world, serves two purposes. Exam results determine higher education admissions and job placements for students, so to some degree the test must be unpredictable. At the same time, the exam is designed to maintain consistent standards across the education system, which requires comparability over time. Theory suggests that these two mandates are in tension: comparability generates predictability, gaming, and teaching to the test, all of which undermine the reliability of the exam for allocating higher education places and jobs.

In a new CGD working paper by researchers from CGD and IEPA-Ghana, we look at the English and maths papers in West Africa’s leading high-stakes exams and show that they can vary significantly in difficulty from year to year. If exams are not comparable over time, this has implications both for countries that rely on the results to make education policy and for fairness to the candidates who sit them.

Pass rates on some of West Africa’s exams vary by over 30 percentage points across years

WASSCE exam results can fluctuate wildly from year to year, as has been documented for mathematics, science, social studies and English. While WAEC (the West African Examinations Council), which coordinates the WASSCE, purports to maintain fixed, absolute competency standards for its exams, actual pass rates on the core mathematics test in Ghana, for instance, swung from 82 percent in 2012, down to 54 percent in 2015, and back up to 87 percent in 2020.

The government’s position seems to be, in part, that these large and irregular swings are simply due to changes in student preparation. That seems unlikely, but it’s hard to disprove, because each year a new population of students sits a new exam, and for security reasons exam questions cannot be repeated. So when you compare exam results across years, you’re comparing different students answering different questions, with no way to disentangle the possible explanations for the swings: changes in student preparedness, changes in exam difficulty, cheating, and so on.

An easy way to check if one exam is harder than another: have students take both tests

In our new working paper, we attempt to overcome this problem and provide evidence of large variation in the difficulty of West Africa’s exams over time. To do so, we gathered all the core mathematics and English language exams administered since 2011, constructed an item bank containing every item on those exams, and administered a hybrid test to 4,380 Ghanaian secondary school students. Because a single group of students answers items from several exam years on the same day, we can isolate differences in exam difficulty from one year to the next.
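To make the logic of this design concrete, here is a minimal Python sketch of its core calculation. It assumes a simple response format introduced purely for illustration: one dict per student mapping a hypothetical item ID to 1 (correct) or 0 (incorrect), plus a lookup from item ID to exam year. It is not the paper’s estimation method, which may rely on a more sophisticated model; it only shows why one group answering items from several years lets difficulty be compared directly.

```python
from collections import defaultdict


def mean_correct_by_year(responses, item_year):
    """Average proportion correct on the items drawn from each exam year.

    responses: one dict per student, mapping a (hypothetical) item ID to
               1 if answered correctly and 0 otherwise. Students need not
               see every item, but we assume items were assigned at random.
    item_year: dict mapping each item ID to the exam year it came from.
    """
    correct = defaultdict(int)    # total correct answers per exam year
    attempted = defaultdict(int)  # total answers given per exam year
    for student in responses:
        for item_id, score in student.items():
            year = item_year[item_id]
            correct[year] += score
            attempted[year] += 1
    # Same students, same day: differences between years reflect item
    # difficulty rather than differences between exam cohorts.
    return {year: correct[year] / attempted[year] for year in sorted(attempted)}
```

For example, if two students both answer a hypothetical 2012 item correctly but get only one of four answers right on two 2015 items, the function returns `{2012: 1.0, 2015: 0.25}`: the 2015 items look harder, and the cohort cannot be the reason.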

Results from our experiment show that changes in mathematics exam difficulty explain almost all year-on-year swings in pass rates

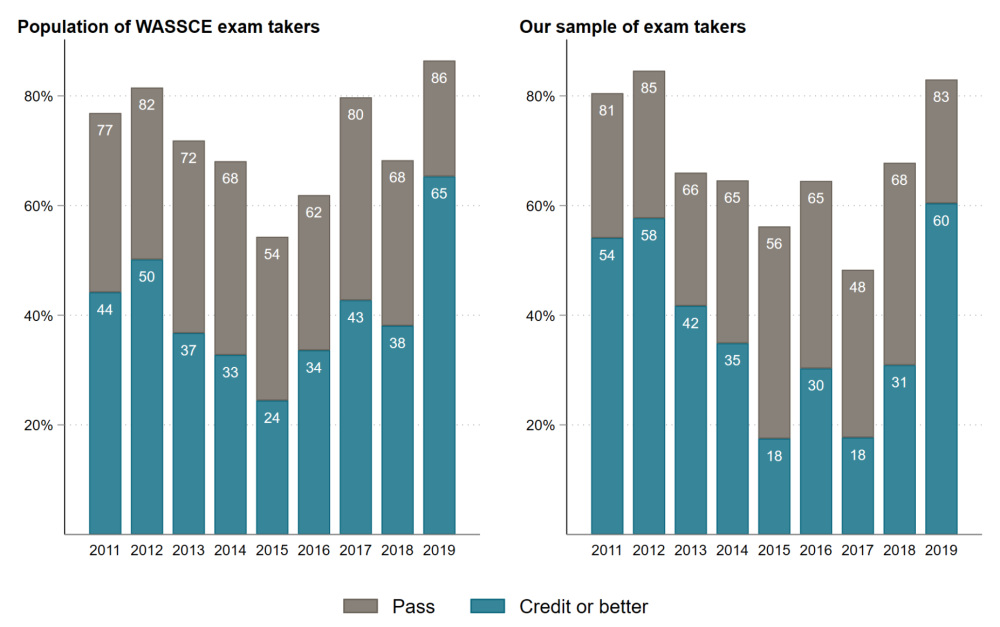

We show that scores across core mathematics items drawn from different exam years closely track fluctuations in Ghana’s national pass rates over time. Figure 1 shows actual WAEC results alongside our difficulty-based estimates, which represent nothing other than differences in difficulty: it’s as if an identical group of students were to sit every test from every exam year.

Figure 1. It’s the exam that changed, not the students

The same students sitting all the exams on the same day reproduce the annual variation in national pass rates

Note: Credit or better = WASSCE grades A1-C6; Pass = WASSCE grades D7-E8
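The sketch below illustrates what “as if an identical group of students were to sit every test” means in practice, under clearly hypothetical assumptions: each student has a percentage score on each year’s items, and one fixed pair of cut scores separates “Credit or better” (A1-C6) from “Pass” (D7-E8). The function name and cut scores are illustrative placeholders; WAEC’s actual grade boundaries and award procedures are not reproduced here.

```python
def award_rates(year_scores, credit_cut, pass_cut):
    """Share of a fixed group of students reaching each award band, by exam year.

    year_scores: dict mapping exam year -> list of percentage scores, one per
                 student, with the same students for every year.
    credit_cut:  hypothetical minimum score for "Credit or better" (A1-C6).
    pass_cut:    hypothetical minimum score for "Pass" (D7-E8); below it is a fail.
    """
    rates = {}
    for year, scores in sorted(year_scores.items()):
        n = len(scores)
        # Booleans sum as 0/1, so these are simple proportions.
        credit = sum(s >= credit_cut for s in scores) / n
        passed = sum(pass_cut <= s < credit_cut for s in scores) / n
        rates[year] = {"credit_or_better": credit, "pass": passed}
    return rates
```

Because the students and the cut scores are held fixed, any change in these rates from one year to the next can only come from the items themselves.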

Our estimates track the early increase in “Pass” and “Credit” awards to 2012, the downward trend to 2015, and the subsequent rise to 2019. They also reproduce a very similar, although not identical, rank order of examination years. Most observed fluctuations appear to be spurious, with differences in test difficulty explaining a majority of the variance in passes awarded. Among the nine years, 2017 stands out: our results indicate a relatively difficult test that year, in contrast with the grades actually awarded.
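One simple way to express “explaining a majority of the variance” is the R-squared from regressing actual pass rates on difficulty-based estimates, which, with a single predictor, equals the squared Pearson correlation. This is a sketch of that generic statistic, not necessarily the exact measure used in the paper; the inputs are assumed to be two equal-length lists of yearly pass rates.

```python
from statistics import mean


def variance_explained(observed, predicted):
    """R-squared of a one-predictor linear fit: the squared Pearson correlation.

    observed:  actual pass rates, one per exam year.
    predicted: difficulty-based pass rates for the same years, same order.
    """
    mo, mp = mean(observed), mean(predicted)
    cov = sum((o - mo) * (p - mp) for o, p in zip(observed, predicted))
    var_o = sum((o - mo) ** 2 for o in observed)
    var_p = sum((p - mp) ** 2 for p in predicted)
    if var_o == 0 or var_p == 0:
        raise ValueError("both series must vary across years")
    return cov ** 2 / (var_o * var_p)
```

A value above 0.5 would correspond to difficulty accounting for most of the year-to-year variance in this simple linear sense.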

Our analysis is not without limitations. We cover most of the information from the terminal exams, but cannot account for Continuous Assessment and other information that WAEC uses to award grades. Even though we can't directly estimate the impact of each part of the grade award process, our findings suggest that these other components are likely to have only a minor influence on grade awards.

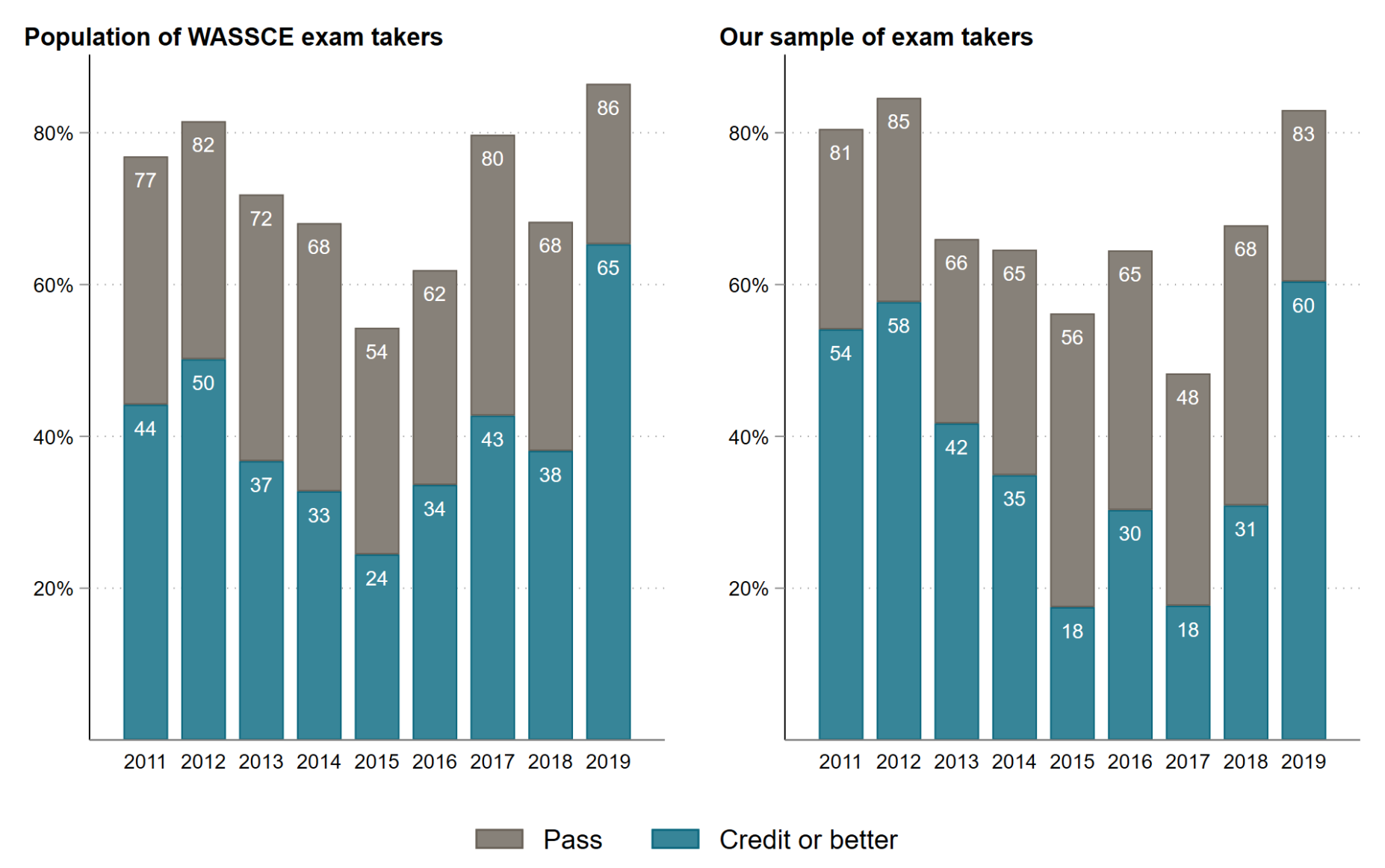

Using these data we can also look at the real trend in core mathematics performance over time. In contrast with the dramatic fluctuations that the public and policymakers debate each year, we find only a modest change in performance since 2011. That said, at the ‘Credit’ (A1 to C6) level there does appear to have been a consistent and quite sizable improvement since 2011.

Figure 2. Real trends in student performance are pretty flat

Once we adjust for differences in exam difficulty, the volatility disappears
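As a rough illustration of “adjusting for differences in exam difficulty”, the sketch below subtracts the difficulty-driven component from observed pass rates relative to a baseline year. The additive percentage-point model, the function name, and the choice of baseline year are all simplifying assumptions of ours; the paper’s own adjustment may be model-based and more involved.

```python
def difficulty_adjusted(observed, predicted, ref_year):
    """Strip the difficulty-driven component out of observed pass rates.

    observed:  dict year -> actual pass rate (percentage points).
    predicted: dict year -> difficulty-based pass rate for a fixed group
               of students (must cover every year in `observed`).
    ref_year:  year whose exam difficulty serves as the baseline.
    Assumes (as a simplification) an additive model on the percentage-point
    scale: observed = real performance + (predicted - predicted[ref_year]).
    """
    base = predicted[ref_year]
    return {year: observed[year] - (predicted[year] - base) for year in sorted(observed)}
```

If a given year’s exam were hard enough to lower the fixed group’s pass rate by 30 points, that year’s observed rate would be raised by 30 points, so only changes not explained by difficulty remain in the adjusted series.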

We conclude that large year-to-year fluctuations in grade awards in core mathematics are unlikely to be the consequence of real shocks (e.g., recessions or teacher strikes) or the short-term effects of policy reforms, nor are they evidence of cheating by exam takers, as is often suggested in the media. Rather, the wildly divergent results across years stem from inconsistencies in how test papers, and the standardisation processes that have grown around them, are designed and implemented.

An unreliable exam has big implications for fairness and efficiency, and may require more than minor tweaks to fix

This paper raises questions for policymakers about how to measure student achievement for high-stakes decisions and, at the same time, how to monitor progress in national education systems. In their study of secondary school scholarships in Ghana, Esther Duflo, Pascaline Dupas, and Michael Kremer report that seven in ten eligible students (81 percent of women and 65 percent of men) expect to be a government employee or in a profession dominated by government employees if they complete Senior High School. An unreliable exam has obvious implications for fairness in allocating university places and access to these stable, well-paid jobs. It also fails to serve its purpose of monitoring the national education system: the tests have wrongly given the impression of a widespread cheating crisis, or that reforms or shocks have had major effects, when the true picture is a slow upward trend in student performance, at least for core mathematics. Without reliable, comparable test scores, we're left in the dark about the effectiveness of education policies like Ghana's recent rollout of free universal secondary school.

Several technical fixes could partially resolve the problems identified in this study, but these fail to address the underlying challenge of using a single high-stakes assessment both for screening pupils and for maintaining consistent educational standards. It may be preferable for countries to develop separate assessments, each designed specifically for its measurement task. One approach could be a “no-stakes” national learning assessment that is statistically linked across years and used to monitor system performance, alongside (but separate from) an annual exit exam for students that can inform university and employment decisions without needing to be comparable over time.
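To give a flavour of what “statistically linked” can mean, here is a minimal sketch of linear (mean-sigma) equating under a single-group design, where the same students take two test forms and a score on form X is mapped onto the scale of form Y by matching means and standard deviations. This is one textbook technique chosen for illustration, not a description of any existing or proposed WAEC procedure; operational programmes often use item response theory with anchor items instead.

```python
from statistics import mean, pstdev


def linear_equate(x_scores, y_scores):
    """Return a function mapping form-X scores onto the form-Y scale.

    x_scores, y_scores: scores of the SAME students on forms X and Y
    (single-group design), so differences reflect the forms, not the people.
    Linear equating: y = mu_y + (sd_y / sd_x) * (x - mu_x).
    """
    mu_x, mu_y = mean(x_scores), mean(y_scores)
    sd_x, sd_y = pstdev(x_scores), pstdev(y_scores)
    if sd_x == 0:
        raise ValueError("form-X scores must vary")

    def to_y_scale(x):
        return mu_y + (sd_y / sd_x) * (x - mu_x)

    return to_y_scale
```

Once a harder form is placed on the scale of a reference form in this way, a fixed standard can be applied to both, which is what comparability over time requires.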

In a recent meeting of WAEC’s governing board, members called for a forum to be created for regular collaboration among the ministers of education to tackle educational development in the region. As a first agenda item, this forum could do far worse than to find ways of using the wealth of data and knowledge held by WAEC to take this research agenda forward across member countries.

And as far as Ghana is concerned, the move to abolish secondary school fees and bring hundreds of thousands of additional kids into the school system is an historic achievement. It would be nice if those kids faced a fairer exam at the end.

Disclaimer

CGD blog posts reflect the views of the authors, drawing on prior research and experience in their areas of expertise. CGD is a nonpartisan, independent organization and does not take institutional positions.