Last year, PEPFAR issued guidelines encouraging country staff to submit a proposal to conduct an “impact evaluation” (IE) as part of their annual Country Operational Plan (COP). They received only four submissions, of which three were funded. But they also learned that many PEPFAR staff, who are mostly program implementers or the managers of program implementers, didn’t fully understand what they were being asked to do: what does PEPFAR mean by “impact evaluations”?

In response, PEPFAR has started to conduct impact evaluation workshops in order to support the in-country teams who want to include an impact evaluation proposal in their March 2014 COP submission. I recently had the pleasure of serving on the faculty of the first impact evaluation workshop in Harare, Zimbabwe.

What was it like for a think-tank guy like me to be involved in this exercise? It was interesting, exhilarating and pretty hard work.

Small teams of program staff came from South Africa, Tanzania, Uganda and Zambia (Zimbabwe sent a few observers). Each team included representatives from the government, from PEPFAR and from a PEPFAR implementing partner (PEPFAR-speak for a local or international contractor). Unlike the participants at most of the donor-funded workshops I have attended over the last three decades, these were extraordinarily engaged. They had all come with one or more candidate research questions, they all worked to fill out a research template, and they all attended almost every session from morning until night, often working late into the evening.

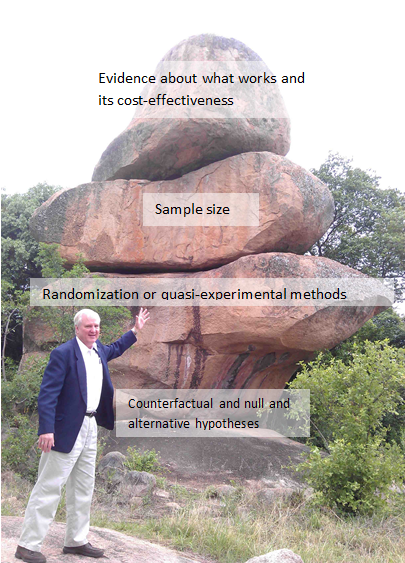

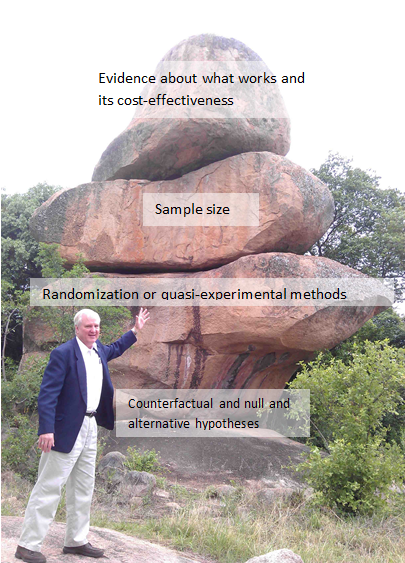

Because the concepts of implementation science and impact evaluation were fairly new to the participants, the faculty introduced the ideas from the ground up. What is a counterfactual? What is so special about randomization? If you can’t randomize, how can you construct a credible counterfactual? What is a null hypothesis, and why do we want to reject it? What is statistical power, and why should we let it determine our sample size, and therefore the cost of our study? What is equipoise and why is it ethical? What is a discount rate and how does it affect a cost-effectiveness analysis? Propensity score matching? Discontinuity designs? Internal and external validity? Phew.
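To make the power question concrete, here is a minimal sketch of how a chosen level of statistical power translates into a sample size. It is not taken from the workshop materials. It assumes a simple two-arm comparison of proportions using the standard normal-approximation formula, and the function name `sample_size_per_arm` and the outcome rates in the usage example are hypothetical, chosen only for illustration.

```python
import math
from statistics import NormalDist


def sample_size_per_arm(p_control, p_treated, alpha=0.05, power=0.80):
    """Approximate participants needed in each arm to detect a difference
    between two proportions with a two-sided test (normal approximation).

    All inputs are illustrative assumptions, not figures from any PEPFAR study.
    """
    if p_control == p_treated:
        raise ValueError("The two proportions must differ.")
    # Critical value for the two-sided significance level.
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    # Critical value for the desired power (1 - probability of a type II error).
    z_beta = NormalDist().inv_cdf(power)
    # Sum of the binomial variances in the two arms.
    variance = p_control * (1 - p_control) + p_treated * (1 - p_treated)
    effect = p_control - p_treated
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


if __name__ == "__main__":
    # Hypothetical scenario: an outcome that occurs in 10% of the comparison
    # group, which the program is hoped to cut to 5%.
    print(sample_size_per_arm(0.10, 0.05))
    # Detecting a smaller effect (10% down to 8%) needs a much larger sample.
    print(sample_size_per_arm(0.10, 0.08))
```

Under these assumptions, halving the detectable effect roughly quadruples the required sample, which is why the choice of power and minimum effect size drives so much of a study's cost.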

The 27 participants had all heard of many of these ideas, but few had heard of all of them. And now they needed to choose the most relevant ones for their country context and put them together into a study design that would pass muster, first with their constituencies back in Johannesburg, Dar es Salaam, Kampala and Lusaka, and then next March with OGAC’s Office of Research and Science.

On the way to the airport, I passed by Harare’s famous “balancing rocks.” They reminded me of the task facing these PEPFAR teams: internally valid evidence, balanced on data from a sample, balanced on the choice of an experimental or quasi-experimental method, balanced on the foundation, which is the choice of a policy-relevant counterfactual and a pair of null and alternative hypotheses. The fact that the in-country teams are designing and overseeing the execution of this learning process ensures that, if they carry these studies out, they will learn a lot about what makes HIV/AIDS programs work or not work. We should be seeing these studies contracted and launched within a year or so. For the sake of all the HIV infections they might avert and all the patients they might help, let’s hope so.

CGD blog posts reflect the views of the authors, drawing on prior research and experience in their areas of expertise.

CGD is a nonpartisan, independent organization and does not take institutional positions.