Zika’s rapid spread has focused media attention on how poorly prepared both rich and less rich countries are for infectious disease outbreaks. And while it seems that we are still flailing, in fact, the international community has been trying to do better for a while. Perhaps the most significant response came in 2014 when the G7 (including the US Government) endorsed the Global Health Security Agenda (GHSA), a partnership of governments and international organizations aiming to accelerate achievement of the core outbreak preparedness and response capacities required by the International Health Regulations.

GHSA assessments: a solid foundation

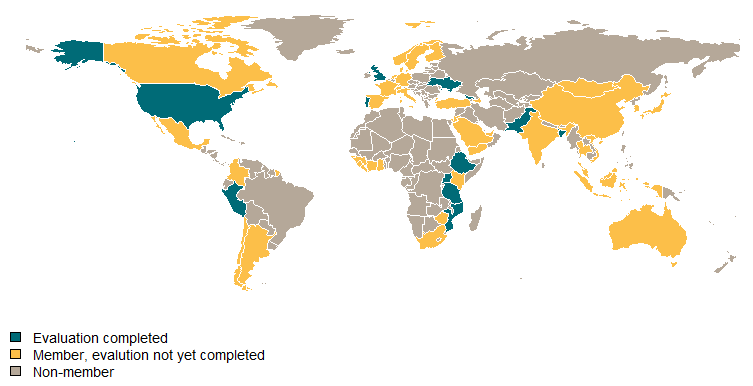

Among these core capacities is the ability of countries to quickly and accurately detect and respond to infectious disease threats. GHSA kicked off its work with country-level assessments, which are essential for understanding where countries stand on preparedness and what they need to improve. The assessments, known as Joint External Evaluations (JEE), are also designed with governments’ incentives in mind, since a government may not always have reason to report on its own capacities with full accuracy. Using the JEE tool, governments first assess themselves, and that self-reported data then undergoes independent international validation; the resulting reports are posted online. The GHSA/JEE process now involves 53 countries, with 12 evaluations completed.

While the assessments and the process behind them are much-needed steps, more needs to be done. JEE measures and scores are not objective or quantifiable, and therefore cannot be easily tracked or replicated. Remedial actions supported by GHSA are not clearly linked to improvements in JEE results. JEEs have still not been conducted in many countries, and funding for JEE-recommended remediation in low-income countries still seems improvised and inadequate.

If we are serious about preventing the spread of emerging infectious diseases and avoiding continued “surprises” like Ebola and Zika, generating hard data on system performance—not just a rough qualitative approximation—is key to making this global effort successful.

Measuring better

Early detection of outbreaks is an important tool in controlling and limiting epidemics. Accordingly, the GHSA includes 11 Action Packages (priority technical areas) for assessment and improvement. The measures included in the Real-Time Surveillance and Reporting Action Packages are listed below:

| Action Package | Measure |
|---|---|
| Real-time Surveillance | Indicator and event-based surveillance systems |
| | Inter-operable, interconnected, electronic real-time reporting systems |
| | Analysis of surveillance data |
| | Syndromic surveillance systems |
| Reporting | System for efficient reporting to WHO, FAO, and OIE |
| | Reporting network and protocols in country |

The JEE asks assessors to score countries on each of the dimensions in the table (as well as other technical areas) with scores from 1 (the worst) to 5 (the best). The numeric score is supplemented with a description of the underlying strengths and weaknesses.

But neither the scores nor the detailed descriptions are based on quantifiable metrics that could be used both to describe the current state and to create accountability in improvement efforts. For example, the Bangladesh report includes extremely vague statements of capacity strengths (“Syndromic-based surveillance systems exist . . . which is a demonstration of the existing capacity”) and challenges (“the system remains somewhat fragmented”). These ambiguous descriptors offer little insight into the real strength of the existing system or the extent of the improvements required. Part of the problem is that the JEE relies on the IHR Core Capacity Monitoring Framework as a source text, which leaves us with indicators such as “timely reporting from 80 percent of reporting units,” where each country can define “timely” for itself, or whether “a unit responsible for . . . surveillance has been identified,” a question that can only be answered yes or no.
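To illustrate why a country-defined notion of “timely” weakens the 80 percent indicator, the minimal sketch below applies the same check to one set of reporting delays under two different deadlines. The delays, the 3-day and 7-day deadlines, and the function names are all hypothetical, chosen only to show that identical performance can pass or fail depending on how “timely” is defined.

```python
# Hypothetical reporting delays, in days, for ten reporting units (illustrative only).
REPORT_DELAYS_DAYS = [1, 2, 2, 3, 3, 4, 5, 6, 7, 9]


def share_timely(delays, deadline_days):
    """Fraction of reporting units whose delay is within the chosen deadline."""
    return sum(1 for d in delays if d <= deadline_days) / len(delays)


def meets_indicator(delays, deadline_days, threshold=0.8):
    """IHR-style check: at least `threshold` of units report 'on time'.

    The deadline is the country's own (hypothetical) definition of 'timely'.
    """
    return share_timely(delays, deadline_days) >= threshold


for deadline in (3, 7):
    share = share_timely(REPORT_DELAYS_DAYS, deadline)
    verdict = meets_indicator(REPORT_DELAYS_DAYS, deadline)
    print(f"deadline={deadline} days: {share:.0%} timely, meets indicator: {verdict}")
```

With a 3-day deadline only half the units count as timely and the indicator fails; with a 7-day deadline 90 percent do and it passes, even though nothing about the system itself has changed.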

Instead of subjective scores, vague descriptions, and binary classifications, we need clearer measures of the actual functionality and outcomes of the surveillance system as a whole. For example (and likely helping to explain how Zika spread for so long without an adequate response), an evaluation of Brazil’s Notifiable Disease Information System (SINAN) examined underreporting and misidentification of dengue cases, finding that the system captured just one in 12 cases on average (and fewer than one in 17 during low-transmission periods). Similar measures have revealed flawed case identification in dengue surveillance systems in Nicaragua, Thailand, and Cambodia.[1]

Objectively quantifying the performance of surveillance and response systems is possible, and there is real room for the GHSA to improve. For instance, for syndromic surveillance systems, IHR 2005 (page 43) provides only the following evaluation criteria: (i) describe how many sites participate in each surveillance system and (ii) describe how data is validated. The JEE could instead adopt more meaningful metrics, such as underreporting estimated from population surveys, misidentified cases found through chart audits, or the population coverage of current surveillance sites.
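As a rough sketch of how the metrics suggested above could be turned into numbers that can be tracked over time, the code below computes sensitivity, an underreporting factor, a misidentification rate, and population coverage. The field names, the formulas, and every input value are assumptions for illustration, not part of the JEE or IHR; the survey figure is picked so that the system captures one in 12 cases, mirroring the dengue finding for Brazil.

```python
from dataclasses import dataclass


@dataclass
class SurveillanceInputs:
    """Hypothetical inputs for one disease and one reporting period."""
    notified_cases: int          # cases captured by the surveillance system
    survey_estimated_cases: int  # true burden estimated from a population survey
    audited_charts: int          # notified cases whose records were audited
    misidentified_in_audit: int  # audited cases found to be misclassified
    population_covered: int      # population in catchment areas of reporting sites
    total_population: int


def surveillance_metrics(x: SurveillanceInputs) -> dict:
    """Compute simple, replicable performance metrics from the inputs."""
    return {
        # Share of estimated true cases that the system actually captured.
        "sensitivity": x.notified_cases / x.survey_estimated_cases,
        # How many true cases there are for every notified case.
        "underreporting_factor": x.survey_estimated_cases / x.notified_cases,
        # Share of audited notifications that turned out to be misclassified.
        "misidentification_rate": x.misidentified_in_audit / x.audited_charts,
        # Share of the population living where surveillance sites report.
        "population_coverage": x.population_covered / x.total_population,
    }


# Illustrative numbers only: 100 notified cases against 1,200 estimated cases.
example = SurveillanceInputs(
    notified_cases=100,
    survey_estimated_cases=1_200,
    audited_charts=200,
    misidentified_in_audit=30,
    population_covered=6_000_000,
    total_population=10_000_000,
)
metrics = surveillance_metrics(example)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

A real evaluation would also need to carry the uncertainty in the survey estimate through to these figures; the sketch treats every input as exact to keep the arithmetic visible.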

Measuring better also matters for meaningful outcomes

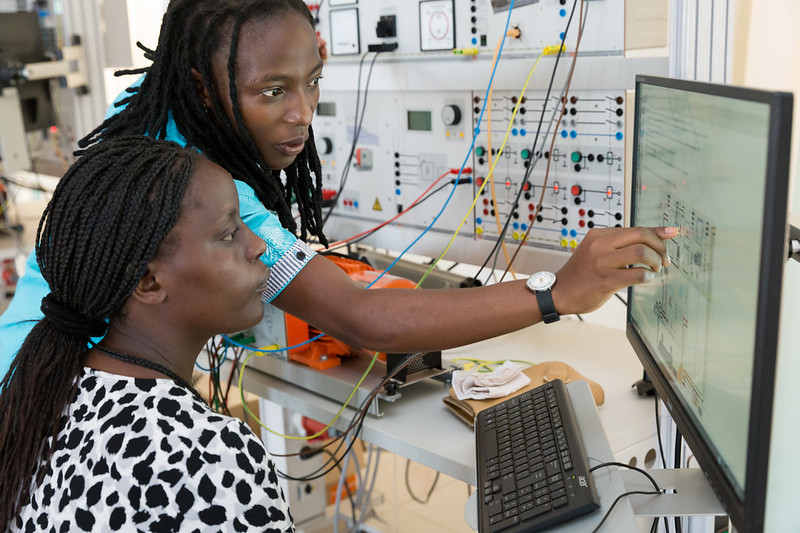

GHSA describes a number of success stories based on investments made in response to evaluations. For instance, it highlights a project in Ethiopia in which 11 engineers were trained to test and maintain lab biosafety equipment with support from the US Centers for Disease Control and others. Under the heading of “investment gets results,” the GHSA website states:

> Ethiopia’s investment in these and other improvements is paying off: between 2000 and 2012, annual deaths from HIV/AIDS fell from 132 to 55 per 100,000 people. Similarly, between 2000 and 2013, annual TB deaths (among the HIV-negative) fell from 102 to 32 per 100,000 people, and annual malaria deaths fell from 42 to 16 per 100,000 people.

Linking training in the use of biosafety equipment to outcome metrics like cause-specific mortality rates is absurd. The focus should instead be on more proximal results, such as rates of occupational exposure, ideally also measured in the JEE itself. The IHR does describe a set of indicators of lab biosafety “core capacities,” but they are not particularly clean measures, and there seem to be better options for standards that might actually be measurable, as in Canada or in this study. Admittedly, lab safety standards are not as clear as one might like. The CDC writes that in the United States, “Laboratory safety cannot be achieved by a single set of standards or methods.” However, there must be something better than the metrics currently on offer.
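To make “more proximal results” concrete, one option is an occupational exposure rate normalized by person-time, sketched below. The per-100-worker-years unit, the function name, and the before/after figures are hypothetical; they do not come from the Ethiopia project, the IHR, or the JEE.

```python
def exposure_rate_per_100_worker_years(incidents: int, worker_years: float) -> float:
    """Occupational exposure incidents per 100 lab-worker-years of person-time."""
    if worker_years <= 0:
        raise ValueError("worker_years must be positive")
    return 100 * incidents / worker_years


# Hypothetical figures for a lab network before and after biosafety training.
before = exposure_rate_per_100_worker_years(incidents=12, worker_years=300)
after = exposure_rate_per_100_worker_years(incidents=5, worker_years=320)
print(f"before: {before:.2f}, after: {after:.2f} exposures per 100 worker-years")
```

Normalizing by person-time keeps the comparison fair when staffing changes between periods. A simple before/after difference still would not prove that the training caused the change, but it is far closer to what the training can plausibly affect than national mortality rates.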

New priorities: adding more countries and more money

During a regional outbreak like Zika, regional surveillance and response capacity affects the ability of any individual country to mount an effective response. But many countries and broad geographic regions remain outside the GHSA partnership, including Brazil and most of the other Central and South American countries involved in the recent Zika outbreak. Reducing the vulnerabilities created by weak regional participation should be an explicit priority, beyond country-specific improvement plans. I (Amanda) have written before about the need to create clearer financial and reputational incentives for measurable progress on implementing the GHSA, but that is going to cost money.

Strengthening the GHSA in the next administration

As the UN General Assembly closed its meeting on the related topic of antimicrobial resistance (AMR) last week, the gap between rhetoric and reality on both GHSA and AMR continues to grow. After so many independent assessments of the Ebola response and a broad consensus on what needs to get done, it is disappointing to see that our organizational and measurement framework for response still lags. There are plenty of meetings, but there is still too little hard data on which to act and too little money with which to respond. A new US Administration should seek to strengthen the GHSA organizationally and financially, ensuring a coherent response, and use the same instruments to track and mitigate AMR.

[1] And this isn’t just an issue in surveillance systems. In an evaluation of European countries’ preparedness for pandemic influenza, only 5 of 30 countries had antiviral stockpiles reserved specifically for containment, let alone a stockpile sufficient for the size of their population.

Disclaimer

CGD blog posts reflect the views of the authors, drawing on prior research and experience in their areas of expertise. CGD is a nonpartisan, independent organization and does not take institutional positions.