
The ‘Learning Adjusted Year of Schooling’ (LAYS) concept, introduced last year by the World Bank, seeks to combine access and learning outcomes into a single measure, allowing funders to compare directly across different kinds of interventions.

A year on and the World Bank continues to back the metric through the Human Capital Project; bilateral donors have begun to apply LAYS in comparing value-for-money across projects; and now a philanthropic foundation is leading a charge to get LAYS adopted as the predominant measure for education.

We like the idea and applaud innovation in measurement, but think LAYS still has some way to go before it’s really ready to be used as a robust measure by funders.

A leading outcome measure in education sounds sensible

LAYS combines quantity (years of schooling) and quality (how much kids know at a given grade level) into a single summary measure of human capital in a society. In the most basic formulation:

LAYS = Average years of schooling × (Test score ÷ Benchmark score)

The average years of schooling attained by a child in a country is adjusted for how much they will have learnt on average, relative to a benchmark of learning such as the ‘advanced’ benchmark used in the TIMSS and PIRLS assessments.
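To make the basic formulation concrete, here is a minimal Python sketch. It assumes learning enters as the ratio of a country’s average test score to a benchmark score. The 625-point default (the TIMSS ‘advanced’ benchmark) and the example country are illustrative assumptions, not the World Bank’s own implementation.

```python
# Minimal sketch of the basic LAYS formulation.
# Assumption: learning is the ratio of the average test score to a benchmark;
# the 625-point default mirrors the TIMSS 'advanced' benchmark, for illustration.

def lays(years_of_schooling: float, test_score: float, benchmark: float = 625.0) -> float:
    """Return learning-adjusted years of schooling."""
    if benchmark <= 0:
        raise ValueError("benchmark must be positive")
    learning_ratio = test_score / benchmark
    return years_of_schooling * learning_ratio


# Hypothetical country: 10 years of schooling, average score of 500.
print(round(lays(10, 500), 2))  # 8.0
```

A country scoring at the benchmark gets full credit for every year; one scoring at 80% of the benchmark gets 0.8 LAYS per year.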

As a ‘macro-measure’, it allows cross-country comparison and improves incentives for policymakers, who could raise scores either by providing more schooling or by offering schooling of higher quality. This sounds sensible: the SDG for education has 10 targets and 11 indicators, which makes it difficult for ministries of education and development partners to track progress and set priorities.

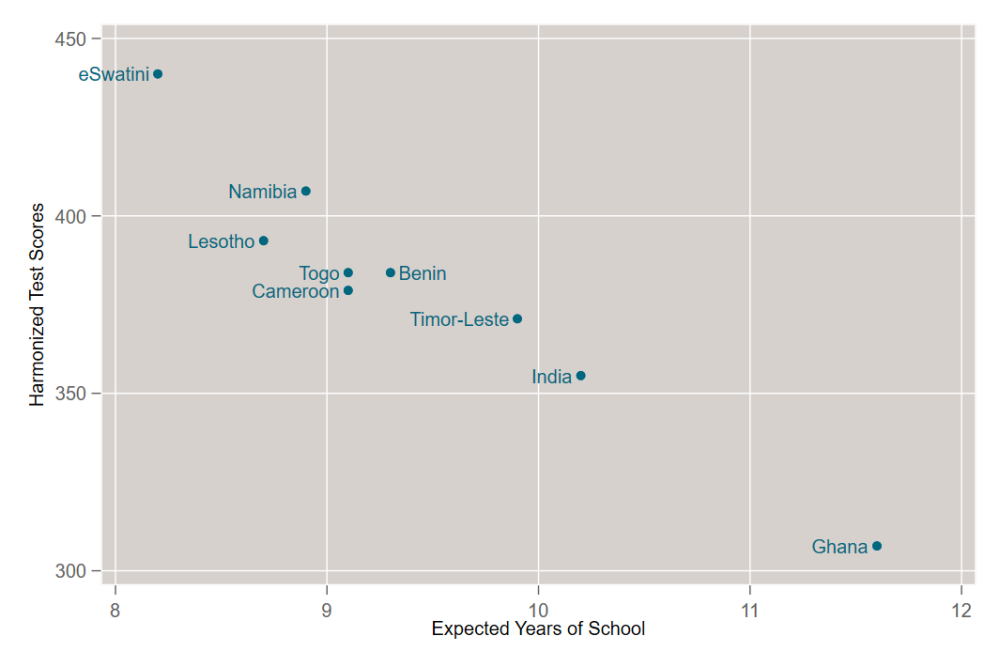

LAYS allows for useful conversations with policymakers about the state of an education system compared with others. For example, eSwatini and Ghana both have around 5.5 LAYS, but they achieve this in very different ways (see Figure 1). Children in eSwatini get 8 years of education of reasonable quality, whereas children in Ghana get almost 12 years of education but of much worse average quality.
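Because LAYS is a product, the average learning level implied by each country’s score can be backed out by dividing LAYS by years of schooling. The sketch below uses only the rounded figures quoted above (about 5.5 LAYS, 8 years for eSwatini, almost 12 for Ghana), so the results are approximate.

```python
# Back out the learning ratio implied by a LAYS score and years of schooling.
# Inputs are the rounded figures quoted in the text, not the underlying data.

def implied_learning_ratio(lays_score: float, years: float) -> float:
    """Share of the benchmark reached on average: LAYS / years."""
    return lays_score / years


for country, years in [("eSwatini", 8.0), ("Ghana", 12.0)]:
    ratio = implied_learning_ratio(5.5, years)
    print(f"{country}: implied learning ratio {ratio:.2f}")
# eSwatini: implied learning ratio 0.69
# Ghana: implied learning ratio 0.46
```

On these rough numbers, a year of schooling in eSwatini delivers about 69% of benchmark learning, against about 46% in Ghana.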

Figure 1: Countries with similar LAYS scores (between 5.5 and 5.9) achieve them through very different combinations of schooling and learning

Source: World Bank Human Capital Index.

But there are issues to resolve

In thinking through how to apply LAYS as a macro-measure (e.g. for cross-country comparison) or as a micro-measure (e.g. for program evaluation), we see three areas where research progress offers the highest return.

1. LAYS assumes an exchange rate between test scores and years of schooling, which needs to be substantiated

The idea that education affects welfare mostly via test scores is a reasonable hypothesis, but one that is overwhelmingly rejected by data. The non-pecuniary returns to education (child health, delayed pregnancy and marriage, democratic participation and so on) are substantial even in education systems that otherwise perform dismally on test scores (e.g. the positive impacts of secondary schooling in Ghana despite low learning gains, shown in Duflo, Dupas & Kremer 2017).

LAYS was developed as a measure of human capital, focused on the ‘productive’ purpose of education. But even from a narrow economic perspective, it isn’t clear that discounting years of schooling by how much is learned in those years makes sense.

There is a strong empirical literature on years of schooling (Mincerian regressions, determinants of graduation and school transition, etc.), and there is some evidence that levels of learning and skills in the adult population affect earnings and growth after controlling for schooling. But the evidence is much weaker on what the ‘right’ exchange rate between learning and schooling is. LAYS imposes a single conversion despite huge variation across countries in the wage and non-pecuniary returns to schooling, and to learning.

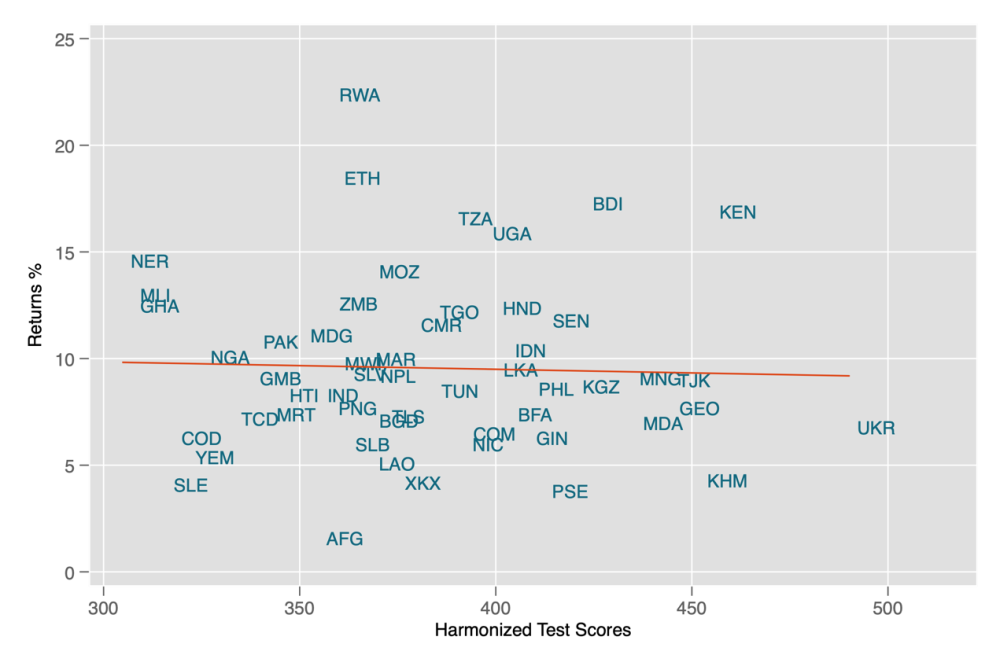

Looking across countries (Figure 2), there is precisely zero relationship between school quality (measured by the World Bank Harmonized Learning Outcomes) and the labour market rate of return on investment in schooling.

Figure 2. Returns to Education and Quality

Source: World Bank Harmonized Learning Outcomes and Montenegro & Patrinos (2014); low- and lower-middle-income countries only.

Moreover, while policymakers and think tanks in Washington and London have decided that dismal learning outcomes constitute a crisis, it’s not clear that this sentiment is shared by citizens in developing countries. In any application of LAYS, eliciting population preferences about education could help determine the right weighting of economic outcomes versus other outcomes, in the same way that the surveys behind quality-adjusted life-years (QALYs) assess the value populations put on both length of life and quality of life in health.

2. LAYS requires comparable data on learning, which doesn’t (yet) exist

LAYS requires a comparable and comprehensive measure of schooling and learning across countries. We have that for schooling: a year of schooling is both comparable and comprehensive. But we are not close to having that for learning.

Other than in high-income countries (which benefit from multiple rounds of PISA and TIMSS tests for comparison), we don’t have a meaningful scale on which to measure test scores across large numbers of countries or time periods. This may be solvable in theory, but certainly not in practice in the short to medium term.

This presents a challenge to any serious application of ‘macro-LAYS’ in a development setting. In a first effort, last year the World Bank released a global dataset on education quality that includes Harmonized Learning Outcomes (HLO) for 164 countries. ‘Exchange rates’ are used to transform scores from international standardized tests (PISA, TIMSS, PASEC, LLECE, etc.) onto a single scale, via countries that have data from more than one assessment. A single HLO score was then produced for each country; it was used to compute LAYS, which in turn entered the World Bank’s Human Capital Index.
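A hedged sketch of what a single ‘exchange rate’ link might look like. It assumes a simple ratio-based link: the mean score of the link countries on the target test divided by their mean score on the source test. The published HLO methodology may differ in detail, and all scores here are hypothetical.

```python
# Sketch of ratio-based linking between two assessments.
# Assumption: the exchange rate is the ratio of mean scores, on the target and
# source tests, of countries that sat both. All scores are hypothetical.

from statistics import mean


def exchange_rate(target_scores: list[float], source_scores: list[float]) -> float:
    """Mean target-test score / mean source-test score for the link countries."""
    if len(target_scores) != len(source_scores) or not target_scores:
        raise ValueError("need paired, non-empty scores for the link countries")
    return mean(target_scores) / mean(source_scores)


def convert(score: float, rate: float) -> float:
    """Express a source-test score on the target scale."""
    return score * rate


# Two hypothetical link countries with scores on a regional and a global test.
rate = exchange_rate(target_scores=[420.0, 380.0], source_scores=[560.0, 440.0])
print(rate)                  # 0.8
print(convert(500.0, rate))  # 400.0
```

Every country that took only the regional test is then placed on the global scale through a rate estimated from just the link countries.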

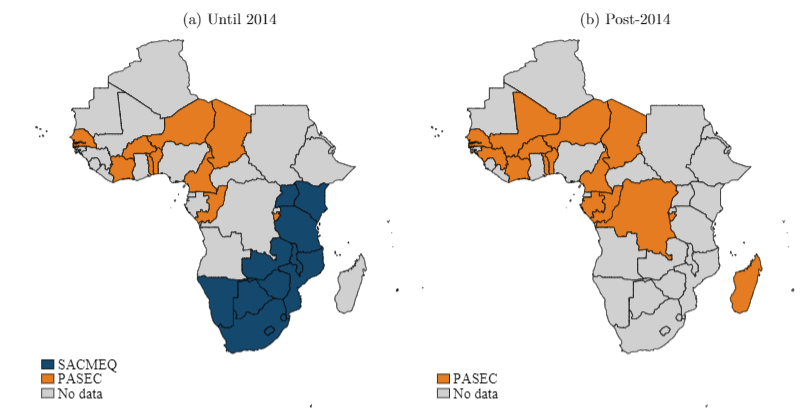

This approach requires a huge leap of faith. It combines tests that assess different constructs, taken by students of different ages and grades: PISA samples 15-year-olds regardless of grade, whereas PASEC samples grade six students and EGRA typically samples students in grades two and three, a significant difference! The challenge is compounded by the fact that exchange rates between assessments were estimated using a limited number of countries, and a winding route is required to convert scores from some regional assessments onto the global scale. To get from PASEC 2014 to an HLO score, for example, country scores are first transformed onto the 2006 PASEC scale (using Togo as the link country), then onto the 2007 SACMEQ scale (using Mauritius as the link country), and finally to a universal HLO score using Botswana, which sat PIRLS/TIMSS in 2011 and so allows the final connection to be made.
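Under the same ratio-based assumption, a chained route multiplies the individual exchange rates, so errors in each link compound. The rates below are hypothetical placeholders, not the published values. The point is only that a 5% error in each of the three links described above becomes a roughly 16% error in the final score.

```python
# Hedged sketch of chained ratio-based linking along the route described in
# the text. Per-link rates are hypothetical, not the published exchange rates.

from math import prod


def chain_rate(link_rates: list[float]) -> float:
    """Overall conversion factor for a chain of ratio-based links."""
    return prod(link_rates)


links = {
    "PASEC 2014 -> PASEC 2006 (via Togo)": 0.95,
    "PASEC 2006 -> SACMEQ 2007 (via Mauritius)": 0.90,
    "SACMEQ 2007 -> HLO (via Botswana)": 1.10,
}

baseline = chain_rate(list(links.values()))
# Suppose each link, estimated from a single country, is overstated by 5%.
perturbed = chain_rate([rate * 1.05 for rate in links.values()])
print(f"overall rate {baseline:.4f}; error after {len(links)} links: {perturbed / baseline - 1:.1%}")
```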

Although this is not a ‘fault’ of LAYS, a comparable measure of learning is central to any global application of the metric (alternatively, LAYS could be calculated for groups of countries that have been part of one assessment, but that is quite a bit less attractive).

New data reveal one of the pitfalls of the approach used to define LAYS in the Human Capital Index. The OECD’s PISA for Development (PISA-D) assessment tested 15-year-olds in seven countries in Latin America, Africa, and Asia. Results were published after the HLO release and reported on the PISA scale, which allowed us to use the PISA exchange rate to transform these scores onto the HLO scale. Once transformed, the PISA-D results differed considerably from the existing HLO scores (Figure 3). Every country but Zambia changed rank order, and while estimated scores for Ecuador, Paraguay, and Honduras changed little, other countries saw substantial shifts: around 100 fewer points for Cambodia and more than 50 fewer points for Zambia and Senegal. This would be equivalent to a 1.0 to 1.5 LAYS reduction in those countries, overnight, all from the HLO conversion.

Figure 3: Comparison of PISA-D results with World Bank Harmonized Learning Outcomes

Source: World Bank Harmonized Learning Outcomes and PISA-D scores for all participating countries, ‘harmonized’ using the exchange rate between 2015 PISA scores and their respective HLO scores.

3. Using LAYS to evaluate projects requires tests that are comprehensive and/or comparable

In theory, LAYS could also be used to compare project performance and become a single metric of success for cost-effectiveness analysis in the education sector. The methodology to compute ‘micro-LAYS’ is still in development, but we foresee some specific challenges.

For micro-LAYS to be sound, the measures need to be comprehensive: in other words, they need to cover more than a narrow subset of learning. In evaluations, we are interested in whether the treatment group learns more than the control group. But to express impact in terms of LAYS, we need to convert this difference into equivalent years of schooling (the typical learning that happens during one year of schooling), and here the content of the test matters. Equivalent years of schooling can be computed from the progress observed in the control group, but if the test measures only a small subset of the total learning in a typical school year, progress in the control group might not give a fair estimate of an equivalent year of schooling.
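As a sketch of this conversion, assume impact in equivalent years of schooling is the treatment-minus-control gain divided by the control group’s gain over one school year. The micro-LAYS methodology is still in development, so the function name and the numbers below are hypothetical. They show how the same 0.10 standard deviation difference might look like less than half a year or two full years, depending on how much of a typical year’s learning the test captures.

```python
# Hypothetical sketch: express an evaluation's impact in equivalent years of
# schooling (EYS), using the control group's progress over one school year as
# the yardstick. Gains are in standard deviations of the evaluation test.

def impact_in_eys(treatment_gain: float, control_gain: float) -> float:
    """(Treatment gain - control gain) / control gain over one school year."""
    if control_gain <= 0:
        raise ValueError("control group must show positive progress over the year")
    return (treatment_gain - control_gain) / control_gain


# Same 0.10 SD difference between groups, measured with two hypothetical tests:
broad_test = impact_in_eys(treatment_gain=0.35, control_gain=0.25)   # covers most of a year's learning
narrow_test = impact_in_eys(treatment_gain=0.15, control_gain=0.05)  # covers only a small subset
print(f"broad test: {broad_test:.2f} EYS, narrow test: {narrow_test:.2f} EYS")
```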

Another way to express results in terms of equivalent years of schooling is to design the evaluation test to be comparable with national or international assessments that indicate how much is learned in a typical year. This is rarely done, so it is often difficult to express the impact of a project on a common global scale.

So, to use LAYS in the context of evaluations, the design of the tests used in these evaluations needs to change dramatically, and we will need to pay more attention to test content and comparability. This requires new tools for evaluators and a change in evaluation practices.

Applying LAYS to an access intervention: girls’ bursaries in Kenya and Malawi

Putting the challenge of comparable learning measures to one side, LAYS might be undesirable when comparing projects designed to increase access. Take girls’ bursaries (scholarships), for example. Suppose an identical project is implemented in Kenya and Malawi, with identical numbers of girls and identical impact: every beneficiary stays in school for two additional years.

But in converting these programs into LAYS, the access impacts are discounted by each country’s learning level, which the program did not affect and was never intended to affect. Learning levels in Kenya are much higher than in Malawi, so the discount is far greater in Malawi. In any LAYS-based comparison of ‘what works,’ the two identical programs, with identical impact on the relevant dimension, look quite different. This outcome is intended by LAYS, which would argue that the additional year in Kenya, with more learning, is more productive. But in this circumstance, would it have been better to avoid an arbitrary adjustment for learning?

(Note (i) that a LAYS-maximising funder is going to prefer access programs in learning-rich countries—which might not be optimal—and (ii) that this example will change when programme cost is included, which might vary systematically with LAYS.)
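A hypothetical illustration of the bursary example: the two programmes are identical, and only the learning ratio used to discount the extra years differs. The learning ratios (0.6 for Kenya, 0.3 for Malawi) are invented for illustration; the text says only that Kenya’s learning levels are much higher.

```python
# Hypothetical illustration: identical bursary programmes in two countries.
# The learning ratios are invented; the text says only that Kenya's learning
# levels are much higher than Malawi's.

def access_lays_gain(beneficiaries: int, extra_years: float, learning_ratio: float) -> float:
    """LAYS gained when additional school years are discounted by the learning ratio."""
    return beneficiaries * extra_years * learning_ratio


BENEFICIARIES = 1000  # hypothetical, identical in both countries
EXTRA_YEARS = 2       # each beneficiary stays in school two more years
learning_ratios = {"Kenya": 0.6, "Malawi": 0.3}  # hypothetical values

for country, ratio in learning_ratios.items():
    gain = access_lays_gain(BENEFICIARIES, EXTRA_YEARS, ratio)
    print(f"{country}: {BENEFICIARIES * EXTRA_YEARS} extra school years -> {gain:.0f} LAYS")
```

Under these assumed ratios, the same 2,000 extra school years register as twice as many LAYS in Kenya, purely because of a learning level the programme did not change.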

LAYS is promising but significant developments are required before it can be considered the predominant measure for global education

LAYS is a promising measure for education, but we should not underestimate the methodological challenges ahead. It took 25 years for the health sector to develop QALYs, or quality-adjusted life-years, and there are still ongoing methodological refinements. To fully back LAYS as the predominant global measure for education, we would like to see a number of developments.

First, the combination of schooling and learning that is built into LAYS should be substantiated. Is it really the case that an additional year of school at full learning is worth the same as two years at half learning across all countries and outcomes? We need a better understanding of the wage and non-wage returns to schooling and skills in different countries to believe this. As we go down that route it might also begin to make sense for LAYS to represent different combinations of schooling and learning in different contexts (for different countries, for different grades completed etc.).
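The equivalence in this question follows directly from the multiplicative form of the basic formula: LAYS depends only on the product of years and the learning ratio. A minimal sketch, with hypothetical helper names, makes that built-in exchange rate explicit.

```python
# In the basic multiplicative formulation, LAYS depends only on
# years x learning_ratio, so any combinations with the same product count as
# equal. Helper names are hypothetical.

def lays(years: float, learning_ratio: float) -> float:
    return years * learning_ratio


def years_needed(target_lays: float, learning_ratio: float) -> float:
    """Years of schooling needed to reach target_lays at a given learning ratio."""
    return target_lays / learning_ratio


print(lays(1, 1.0) == lays(2, 0.5))  # True: one year at full learning = two at half
print(years_needed(1.0, 0.25))       # 4.0 years at quarter learning
```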

Second, since education is not only about learning, and since parents do not only value learning, the LAYS exercise prompts us to put more effort into understanding what citizens actually want the education system to do. It is unlikely that a single measure will be able to aggregate all preferences, so education policies and projects should not only be measured in terms of LAYS but also for their effect on other welfare outcomes.

Third, the weakest link in LAYS is the measure of learning—especially for low-income countries. Better global coordination is needed to obtain comparable and regular learning assessments for all countries in the world. Better learning measures would improve on the desk-based estimates of HLO and could help build countries’ national capacity, support better research, and help diffuse reforms that raise learning. Since the World Bank has just launched the Learning Poverty target, perhaps they’ll back some measurement that fills the gap between EGRA and PISA/TIMSS.

Fourth, for LAYS to work as a measure for impact evaluation, more comparable tests are needed. Impact estimates are sensitive to test construction, such as floor and ceiling effects and the types of competencies tested. Comparing effect sizes expressed in standard deviations across tests is sensitive to differences in the underlying variance of learning levels in the sampled groups. Existing measurement protocols typically do not allow researchers to characterize the extent of learning gains being made under ‘business as usual’ schooling in the education systems being studied. Our friends at J-PAL are starting to address these issues by building a ‘world item bank’ of assessments with theoretical and psychometric compatibility, which can be used by researchers and implementers to enable accurate comparisons across countries and contexts of learning levels and of the effect sizes of different interventions. Once completed, that should make LAYS far more meaningful at the project level.
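A small hypothetical illustration of the standard-deviation problem: the same raw gain on the same test produces different effect sizes when the spread of learning levels in the sample differs. The function name and numbers are assumptions for illustration.

```python
# Hypothetical illustration: the same raw gain yields different
# standard-deviation effect sizes when the spread of scores differs.

def effect_size_sd(raw_gain: float, sd_of_scores: float) -> float:
    """Raw treatment-control difference divided by the standard deviation of scores."""
    if sd_of_scores <= 0:
        raise ValueError("standard deviation must be positive")
    return raw_gain / sd_of_scores


raw_gain = 10.0  # points on the same test, in two hypothetical samples
print(effect_size_sd(raw_gain, 50.0))   # 0.2 (narrow spread of learning levels)
print(effect_size_sd(raw_gain, 100.0))  # 0.1 (wide spread of learning levels)
```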

LAYS certainly holds promise, but it’s got quite some way to go before it can be considered a robust, single, composite measure for global education by funders and others. We hope the interest that LAYS has generated will mean more innovation in measurement and more support for initiatives like J-PAL’s that are looking to address some of the conceptual and methodological challenges that currently exist in education measurement.

Thanks to John Rendel and the Peter Cundill Foundation for convening the discussion on LAYS, to Noam Angrist, Deon Filmer, and Justin Sandefur for their helpful comments, and to CGD’s global health programme for all their wisdom on QALYs.

Disclaimer

CGD blog posts reflect the views of the authors, drawing on prior research and experience in their areas of expertise. CGD is a nonpartisan, independent organization and does not take institutional positions.